by Beth Stackpole

Feb 18, 2026

What you’ll learn:

- What agentic AI is and how it differs from traditional generative AI tools like chatbots.

- How organizations are already using AI agents to automate complex, multistep workflows.

- What leaders should consider when implementing agentic AI, including infrastructure, security, and human oversight.

Rewind a few years, and large language models and generative artificial intelligence were barely on the public radar, let alone a catalyst for changing how we work and perform everyday tasks.

Today, attention has shifted to the next evolution of generative AI: AI agents, or agentic AI, a new breed of AI systems that are semi- or fully autonomous and thus able to perceive, reason, and act on their own. Unlike the now-familiar chatbots that field questions and solve problems, this emerging class of AI integrates with other software systems to complete tasks independently or with minimal human supervision.

“The agentic AI age is already here. We have agents deployed at scale in the economy to perform all kinds of tasks,” said Sinan Aral, a professor of management, IT, and marketing at MIT Sloan.

Nvidia CEO Jensen Huang, in his keynote address at the 2025 Consumer Electronics Show, said that enterprise AI agents would create a “multi-trillion-dollar opportunity” for many industries, from medicine to software engineering.

A spring 2025 survey conducted by MIT Sloan Management Review and Boston Consulting Group found that 35% of respondents had adopted AI agents by 2023, with another 44% expressing plans to deploy the technology in short order. Leading software vendors, including Microsoft, Salesforce, Google, and IBM, are fueling large-scale implementation by embedding agentic AI capabilities directly in their software platforms.

Yet Aral said that even companies on the cutting edge of deployment don’t fully grasp how to use AI agents to maximize productivity and performance. He describes society’s collective understanding of agentic AI’s broader implications as nascent, if not nonexistent.

The technology presents the same high-stakes challenges around data quality, governance, trust, and security as other AI implementations. Its rapid evolution could also push organizations to adopt agentic AI without fully understanding its capabilities or having created a formal strategy and risk management framework.

“It’s absolutely an imperative that every organization have a strategy to deploy and utilize agents in customer-facing and internal use cases,” Aral said. “But that sort of agentic AI strategy requires an understanding and systematic assessment of risks as well as business benefits in order to deliver true business value.”

What is agentic AI?

While there isn’t a universally agreed-upon definition of agentic AI, it has some broad characteristics. Whereas generative AI automates the creation of complex text, images, and video based on human language interaction, AI agents go further, acting and making decisions in a way a human might, said MIT Sloan associate professor John Horton.

In a research paper exploring the economic implications of agents and AI-mediated transactions, Horton and his co-authors focus on a particular class of AI agents: “autonomous software systems that perceive, reason, and act in digital environments to achieve goals on behalf of human principals, with capabilities for tool use, economic transactions, and strategic interaction.” AI agents can employ standard building blocks, such as APIs, to communicate with other agents and humans, receive and send money, and access and interact with the internet, the researchers write.

MIT Sloan professor Kate Kellogg and her co-researchers further explain in a 2025 paper that AI agents enhance large language models and similar generalist AI models by enabling them to automate complex procedures. “They can execute multi-step plans, use external tools, and interact with digital environments to function as powerful components within larger workflows,” the researchers write.
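To make the “perceive, reason, and act” description more concrete, here is a minimal sketch of an agent loop in Python. It is illustrative only and assumes a stand-in planner that returns a fixed two-step plan and two hypothetical tools, `search_flights` and `book_flight`, with stubbed data. In a real system, a large language model would typically choose each step and the tools would call external APIs; none of these names come from the researchers’ papers.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


# Hypothetical tools standing in for external APIs an agent might call.
def search_flights(destination: str) -> list[dict[str, Any]]:
    # Stubbed results; a real tool would query a travel service.
    return [
        {"flight": "XY100", "destination": destination, "price": 420},
        {"flight": "XY200", "destination": destination, "price": 380},
    ]


def book_flight(flight: str) -> str:
    return f"booked {flight}"


TOOLS: dict[str, Callable[..., Any]] = {
    "search_flights": search_flights,
    "book_flight": book_flight,
}


@dataclass
class Agent:
    goal: str
    memory: list[tuple[str, Any]] = field(default_factory=list)

    def plan(self) -> list[str]:
        # Stand-in for an LLM planner: a fixed multistep plan.
        return ["search_flights", "book_flight"]

    def act(self, step: str) -> Any:
        if step == "search_flights":
            return TOOLS[step](self.goal)
        if step == "book_flight":
            # "Reason" over what was perceived in the previous step:
            # pick the cheapest option returned by the search tool.
            options = self.memory[-1][1]
            cheapest = min(options, key=lambda option: option["price"])
            return TOOLS[step](cheapest["flight"])
        raise ValueError(f"unknown step: {step}")

    def run(self) -> list[tuple[str, Any]]:
        for step in self.plan():
            result = self.act(step)
            # "Perceive": store each tool's output so later steps can use it.
            self.memory.append((step, result))
        return self.memory


if __name__ == "__main__":
    for step, result in Agent(goal="Lisbon").run():
        print(step, "->", result)
```

The point of the structure is that each step’s output feeds the next decision, which is what lets an agent carry out a multistep plan rather than answer a single prompt.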


For example, an AI agent could plan a vacation using input from a consumer along with API access to specific websites, emails, and communications platforms like Slack to decide what hotels or flights work best. With credit card permissions, the agent could book and pay for the entire transaction without human involvement. In the physical world, an AI agent could monitor real-time video and vision systems in a warehouse to identify events outside of normal operations.

“The agent could raise a red flag or even be programmed to stop a conveyor belt if there was a problem,” Aral said. “It is not just the digital world — agents can actually take actions that change things happening in the physical world.”

Aral draws a slight distinction between AI agents and the broader category of agentic AI, although most people still refer to the two interchangeably. He defines agentic AI as systems that incorporate multiple, different agents that are orchestrating a task together — for example, a marketplace of agents representing both the buy and sell side during a negotiation or transaction.
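As a rough illustration of two agents interacting on opposite sides of a transaction, the Python sketch below simulates a toy alternating-concession protocol: a buyer agent raises its bid and a seller agent lowers its ask by a fixed step each round, never past their walk-away limits, until the offers meet. This is an assumed, deliberately simple protocol for illustration, not how any particular agent marketplace works; the function name, parameters, and the split-the-difference rule are all hypothetical. For simplicity, one function plays both sides, so the private limits are visible to the simulation.

```python
from typing import Optional


def negotiate(
    buyer_open: float,
    buyer_max: float,
    seller_open: float,
    seller_min: float,
    step: float,
    max_rounds: int = 50,
) -> tuple[Optional[float], int]:
    """Toy alternating-concession negotiation between a buyer and a seller agent.

    Each round, the agents check whether the buyer's bid meets the seller's ask.
    If not, each concedes by `step`, but never beyond its own walk-away limit.
    Returns (agreed_price, rounds_used); agreed_price is None if no deal is reached.
    """
    bid, ask = buyer_open, seller_open
    for round_no in range(1, max_rounds + 1):
        if bid >= ask:
            # Offers have met or crossed: split the difference.
            return round((bid + ask) / 2, 2), round_no
        bid = min(bid + step, buyer_max)   # buyer concedes upward, capped at its max
        ask = max(ask - step, seller_min)  # seller concedes downward, floored at its min
    return None, max_rounds


if __name__ == "__main__":
    # Overlapping limits: a deal is possible.
    print(negotiate(100, 150, 200, 130, step=10))
    # Non-overlapping limits: the agents never agree.
    print(negotiate(100, 120, 200, 150, step=10))
```

With overlapping limits, the first call reaches a price; when the buyer’s maximum is below the seller’s minimum, the agents stall at their limits and no deal is made.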

How are businesses using agentic AI?

Companies across sectors are starting to use AI agents. In the banking and financial services space, companies such as JPMorgan Chase are exploring the use of AI agents to detect fraud, provide customized financial advice, and automate loan approvals and legal and compliance processes, which could reduce the need for junior bankers. Retail giants like Walmart are building LLM-powered AI agents to automate personal shopping experiences and to facilitate time-consuming customer service and business activities such as merchandise planning and problem resolution.

“The benefit of agentic AI systems is they can complete an entire workflow with multiple steps and execute actions,” Kellogg said.

One particularly important application for agents may be performing tasks that a human typically would — such as writing contracts, negotiating terms, or determining prices — at a much lower marginal cost.

“The fundamental economic promise of AI agents is that they can dramatically reduce transaction costs — the time and effort involved in searching, communicating, and contracting,” said Peyman Shahidi, a doctoral candidate at MIT Sloan.

AI agents can also provide economic value by helping humans make better market decisions, according to Horton. His research with Shahidi about agents engaging in economic transactions argues that people will deploy AI agents in two scenarios:

- To make higher-quality decisions than humans, thanks to fewer information constraints or cognitive limitations.

- To make decisions of similar or even lower quality than the choices humans would make, but with dramatic reductions in cost and effort.

In markets with high-stakes transactions, such as real estate or investing, AI agents can analyze vast amounts of data and documentation without fatigue and at near-zero marginal cost, Horton and his co-authors write. In areas that involve a lot of counterparties or that require a substantial effort to evaluate options — startup funding, college admissions, or B2B procurement, to name a few — agents deliver value by reading reviews, analyzing metrics, and comparing attributes across a range of options.

“AI agents don’t get tired and can work 24 hours a day,” Horton said.

His research also shows that AI agents can provide value in situations where there are information asymmetries, like shopping for insurance or a used car online, by continuously monitoring myriad information sources, cross-referencing data, and immediately identifying discrepancies that would take humans hours to uncover. AI agents could transform home buying or estate planning by giving users the collective experience of millions of transactions to enrich their negotiations.

Aral’s research has found that when humans work with AI agents, such pairings can lead to improved productivity and performance.

What should organizations bear in mind when implementing agentic AI?

While best practices for implementation are still evolving, keep the following in mind to improve the odds of success with AI agents:

Remember that implementation is often the heaviest lift.

Making agentic AI work in practice can involve unexpected challenges. Kellogg and colleagues’ 2025 research paper describes the use of an AI agent to detect adverse events among cancer patients based on clinical notes. The biggest challenge wasn’t prompt engineering or model fine-tuning. Instead, the researchers found that 80% of the work was consumed by unglamorous tasks associated with data engineering, stakeholder alignment, governance, and workflow integration.

Converting data into standard, structured formats for AI agents is especially important, because it helps them identify different data sources and requirements while maintaining consistency. Establishing continuous validation frameworks and robust API management, as well as working with vendors to ensure that they’re up-to-date on the latest model versions, is also crucial to agentic AI’s ability to run smoothly.
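Here is a minimal Python sketch of what “converting data into standard, structured formats” plus a validation check might look like: records from two differently shaped sources are mapped into one shared schema, then checked before an agent consumes them. The schema (`Record`), the source formats, the field names, and the validation rules are all hypothetical assumptions for illustration, not drawn from Kellogg’s study.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Callable


@dataclass(frozen=True)
class Record:
    """Hypothetical standard schema that every data source is mapped into."""
    entity_id: str
    recorded_on: date
    text: str


def from_source_a(raw: dict[str, Any]) -> Record:
    # Hypothetical source A: fields "id", "date" (ISO 8601), and "body".
    return Record(raw["id"], date.fromisoformat(raw["date"]), raw["body"])


def from_source_b(raw: dict[str, Any]) -> Record:
    # Hypothetical source B: fields "ref", "when" (MM/DD/YYYY), and "note".
    month, day, year = (int(part) for part in raw["when"].split("/"))
    return Record(raw["ref"], date(year, month, day), raw["note"])


PARSERS: dict[str, Callable[[dict[str, Any]], Record]] = {
    "a": from_source_a,
    "b": from_source_b,
}


def validate(record: Record) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    if not record.entity_id.strip():
        problems.append("missing entity_id")
    if record.recorded_on > date.today():
        problems.append("recorded_on is in the future")
    if not record.text.strip():
        problems.append("empty text")
    return problems


def normalize(
    batch: list[tuple[str, dict[str, Any]]],
) -> tuple[list[Record], list[tuple[Record, list[str]]]]:
    """Map each raw item into the standard schema, then split passing and failing records."""
    accepted: list[Record] = []
    rejected: list[tuple[Record, list[str]]] = []
    for source, raw in batch:
        record = PARSERS[source](raw)
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            accepted.append(record)
    return accepted, rejected


if __name__ == "__main__":
    batch = [
        ("a", {"id": "X1", "date": "2025-03-04", "body": "Follow-up visit."}),
        ("b", {"ref": "X2", "when": "03/05/2025", "note": "   "}),
    ]
    ok, bad = normalize(batch)
    print(len(ok), "accepted;", [(r.entity_id, p) for r, p in bad])
```

Running the same checks on every new batch, rather than once at launch, is one simple way to approximate the continuous validation the paragraph describes.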

Other areas to pay attention to include putting the right regulatory controls in place, implementing guardrails to prevent prompt and model drift, and defining clear outcomes and key performance indicators at each phase of deployment. Establishing metrics aligned to key business goals is also important, because benefits from agentic AI can be misconstrued. “Just because an agentic AI model reclaims 20% of someone’s time, that doesn’t mean it’s a 20% labor-cost savings,” Kellogg said.

Consider the “personality” of AI agents.

In a large-scale marketing experiment, Aral’s research team found that designing AI agents to have personalities that complement the personalities of other agents and human colleagues led to better performance, productivity, and teamwork outcomes. For example, people who have “open” personalities perform better when working with a conscientious and agreeable AI agent, whereas conscientious people perform worse with agreeable AI.

“Human teams perform better or worse depending on the types of people assembled on the team and the combinations of personalities,” Aral said. “The same is true when adding AI agents to a team.” An overconfident human would benefit from an AI agent that pushes back, but that same agent personality type might not have a positive effect on a less-confident individual.

Embrace a human-centered approach to decision-making.

Aral’s research also found that AI agents can struggle with tasks that humans typically do easily, such as handling exceptions, and their decision-making remains poorly understood. In part, this is because AI agents are trained to take specific actions in given situations.

“You have to make sure the agentic decision-making is aligned with a human-centered decision process,” Aral said.

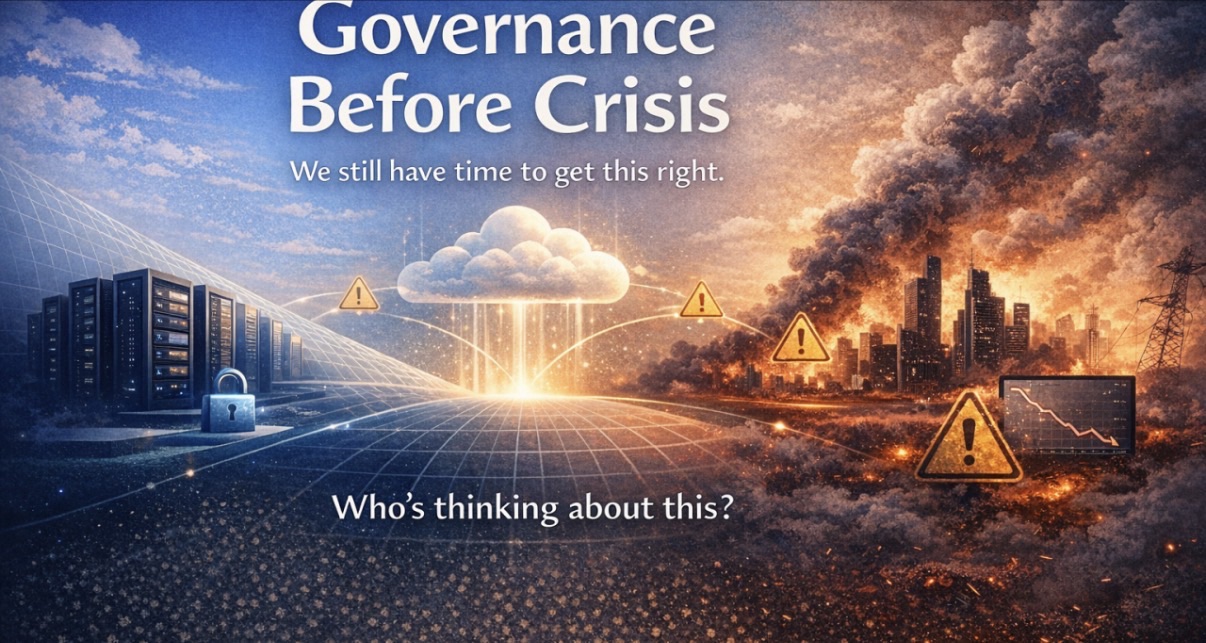

What are the risks of agentic AI?

Organizations need to be aware of a host of challenges as agentic AI matures. These include:

- Inconsistent reliability and unethical behavior. A rogue AI agent that rejects a mortgage application or a college applicant based on faulty information can do as much damage as simple hallucinations, or more. “You need to be able to explain business decisions and consistently apply the same standards to every case,” Aral said.

- Cybersecurity. As AI agents gain permissions to access different datasets and enterprise systems to automate tasks, don’t underestimate the importance of building robust permission-based systems, Kellogg said.

- Accountability. Organizations need to clearly delineate who bears responsibility when agentic AI makes an error or causes harm, Kellogg said. They should pay special attention to the possibility of system malfunctions, especially if the AI agent is autonomously performing workflows with minimal or no human supervision.
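One common way to approach the permission and accountability concerns above is to give each agent only the scopes it needs, escalate high-stakes actions to a human, and log every attempt. The Python sketch below illustrates that pattern under stated assumptions: the scope strings (`travel:read`, `payments:write`), the per-transaction `spend_limit`, and the tools `read_itinerary` and `charge_card` are all hypothetical, and this is one possible design rather than a method Kellogg prescribes.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


class PermissionDenied(Exception):
    """Raised when an agent calls a tool outside its granted scopes."""


@dataclass
class ToolSpec:
    func: Callable[..., Any]
    required_scope: str  # hypothetical scope the caller must hold


@dataclass
class GuardedAgent:
    name: str
    scopes: set[str]
    spend_limit: float  # hypothetical cap above which a human must approve
    audit_log: list[str] = field(default_factory=list)

    def call(self, tools: dict[str, ToolSpec], tool_name: str, **kwargs: Any) -> Any:
        spec = tools[tool_name]
        # Least privilege: refuse any tool the agent was not explicitly granted.
        if spec.required_scope not in self.scopes:
            self.audit_log.append(f"DENIED {self.name} {tool_name}")
            raise PermissionDenied(f"{self.name} lacks scope {spec.required_scope!r}")
        # Human oversight: route large transactions to a person instead of executing.
        amount = kwargs.get("amount", 0.0)
        if amount > self.spend_limit:
            self.audit_log.append(f"ESCALATED {self.name} {tool_name} amount={amount}")
            return "pending human approval"
        result = spec.func(**kwargs)
        self.audit_log.append(f"ALLOWED {self.name} {tool_name}")
        return result


# Hypothetical tools.
def read_itinerary(trip_id: str) -> str:
    return f"itinerary for {trip_id}"


def charge_card(amount: float) -> str:
    return f"charged ${amount:.2f}"


TOOLS = {
    "read_itinerary": ToolSpec(read_itinerary, "travel:read"),
    "charge_card": ToolSpec(charge_card, "payments:write"),
}


if __name__ == "__main__":
    planner = GuardedAgent("trip-planner", {"travel:read", "payments:write"}, spend_limit=500.0)
    print(planner.call(TOOLS, "charge_card", amount=120.0))
    print(planner.call(TOOLS, "charge_card", amount=900.0))
    print(planner.audit_log)
```

The audit log gives the organization a record of what each agent attempted, which supports the question of who is responsible when something goes wrong.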

While the full risk picture is still murky, organizations need to make monitoring a permanent operational expense, not a one-time project cost, Kellogg said. A governance board should be established at the organizational level to oversee accountability, while specific responsibilities, such as monitoring and enforcing safety rules, should be delegated to key individuals.

“As you move agency from humans to machines, there’s a real increase in the importance of governance and infrastructure to control and support agentic systems,” Kellogg said. And demonstrating results remains one of the biggest challenges, and risks, for agentic AI initiatives. “Without shared, robust metrics, it’s difficult to prove value — or even to know whether these systems are truly accomplishing desired outcomes rather than inadvertently introducing new risks,” she said.

Next steps

Read about four recent studies on agentic AI from the MIT Initiative on the Digital Economy.

Read more about agentic AI in MIT Sloan Management Review:

- “The Emerging Agentic Enterprise: How Leaders Must Navigate a New Age of AI”

- “Agentic AI: Nine Essential Questions”

Read the research briefing “Business Models in the Agentic AI Era,” from the MIT Center for Information Systems Research.

Browse the AI Agent Index, a public database from the MIT Computer Science and Artificial Intelligence Laboratory that documents agentic AI systems that are in use.

Register for the MIT Sloan Executive Education course AI Executive Academy to learn more about applying AI strategy in your organization.

Sinan Aral is a global authority on business analytics and is the David Austin Professor of Management, Marketing, IT and Data Science at MIT Sloan; director of the MIT Initiative on the Digital Economy; and a founding partner at the venture capital firms Manifest Capital and Milemark Capital. His research focuses on applied AI, social media, and disinformation.

John Horton is the Chrysler Associate Professor of Management and an associate professor of information technologies at the MIT Sloan School of Management. His research focuses on the intersection of labor economics, market design, and information systems. He is particularly interested in improving the efficiency and equity of matching markets.

Kate Kellogg is the David J. McGrath Jr. Professor of Management and Innovation at the MIT Sloan School of Management. Her research focuses on helping knowledge workers and organizations develop and implement predictive and generative AI products to improve decision-making, collaboration, and learning.

Peyman Shahidi is a PhD candidate at MIT Sloan. He studies market design and labor economics, with a focus on the effects of AI on labor markets and online platforms.

Article link: https://mitsloan.mit.edu/ideas-made-to-matter/agentic-ai-explained