Article link: https://crsreports.congress.gov/product/pdf/IF/IF10429/10

All posts for the month December, 2021

Jared Serbu

After having spent several years tinkering with the Defense Department’s acquisition rules, Congress is turning its attention to one of the other main factors that bog down the DoD procurement system: the byzantine apparatus the Pentagon and lawmakers use to actually fund each military program.

In the crosshairs is what’s known as the Planning, Programming, Budgeting and Execution (PPBE) process, an early Cold War-era construct that, translated to the modern era, means Defense officials usually wait at least two years after they realize they need a new technology before money arrives to start solving the problem.

The 2022 Defense authorization bill President Joe Biden signed this week contains two separate provisions aimed at tackling the PPBE predicament. One sets up an expert commission to take the current process apart and come up with alternatives. A second orders DoD itself to create a plan to consolidate all of the IT systems it uses to plan and execute its budget.

The current stringent, timeline-focused process has its roots in the late 1950s and early 1960s, when Defense reformers, including former Secretary Robert McNamara, were centralizing the Pentagon’s control over the military services and applying then-modern management techniques to run the world’s biggest bureaucracy.

“And one of the relics of those days gone by is the current DoD budget process,” Sen. Jack Reed, the chairman of the Senate Armed Services Committee, said at a hearing earlier this year. “It was a product of McNamara, the Whiz Kids, and I can assure you those Whiz Kids are not kids anymore. It is 70 years.”

Under the new law, the new commission will start to take shape in February, and will have until September 2023 to deliver a final report to Congress and the Defense secretary. Its 14 members will be chosen by Defense Secretary Lloyd Austin, top House and Senate leaders, and the chairmen and ranking members of each of the congressional defense committees.

The long time horizon may have something to do with the fact that the commission’s task is massive. Its members, each of whom must be experts on budgeting or management, are being told to examine DoD’s current budgeting processes from top to bottom, and then compare them against other federal agencies, private sector companies, and other countries.

And it’s not just the process of drawing up budgets the commission will concern itself with. After all, that’s only the “B” in PPBE. It’ll also be tasked with scrutinizing the scrupulous, bureaucratic steps that start long before each year’s budget proposal, including the defense planning guidance and program objective memoranda (POMs) that eventually work their way into dollar figures for DoD planners and lawmakers to make decisions about how much to spend on each program.

Defense experts both in and outside of the Pentagon tend to believe the rule-intensive complexity involved in planning and requesting funding for DoD programs is one of the biggest impediments to speedy procurements, perhaps even more than the government’s acquisition rules themselves.

“Let’s say you find a great prototype someplace and you want to buy it. Well, did you have the foresight two years ago to plan it into your POM? If you didn’t, guess what? You have no authority to buy it,” Heidi Shyu, the undersecretary of Defense for Research and Engineering, said in a recent interview with Federal News Network. “And let’s say you’re going to plan it into your POM. Well, in two years’ time, maybe you’ll get the money, but the technology is already several years old. The PPBE process is too sequential, too linear, too old-fashioned. It works really well if you’re moving at a very slow, very methodical, very risk-averse pace. But in today’s world, when competition against your adversaries is key, it’s got to change.”

And by some estimates, getting a new technology or product funded under the current system within two years is actually pretty optimistic.

In a February research paper, co-authors Bill Greenwalt and Dan Patt concluded the “best case” scenario under the PPBE process is actually more like seven years. That “best case” assumes DoD is using new, faster acquisition techniques like other transaction authority or middle-tier acquisition, getting money on contract almost as soon as it receives it, and getting Congressional appropriations on time instead of operating under a continuing resolution.

Under those circumstances, PPBE processes — not acquisition rules — are actually the “pacing element(s)” in DoD technology development, they wrote.

“The PPBE and accompanying appropriations process is the glue that holds the other elements of the process triad together and must be a priority for reevaluation,” according to the paper, published by the Hudson Institute. “These elements require that the DoD document any planned new capabilities, forecast milestones, and system performance years in advance. U.S. innovation time is lengthening in large part because of delays before production starts: In conceptualization, requirements, planning, and acquisition processes, and are driven in large part by the structure of the U.S. resource allocation process. Understanding these processes provides the motivation for reform.”

Officially, the new panel will be called the Commission on Planning, Programming, Budgeting, and Execution, but it’s customary to name Congressionally-chartered expert groups after the section of the NDAA that created them, which would make this one the “Section 1004 Panel.”

A similarly-structured commission, the Section 809 Panel, which Congress created in 2015 to examine DoD’s acquisition regulations, also concluded that lawmakers wouldn’t achieve the streamlining they were looking for until they reformed the PPBE process. They reached that conclusion even after making 98 recommendations to Congress that focused mainly on procurement policies, dozens of which have been implemented.

One of the panel’s key diagnoses was that the three pieces of what’s often called the “Big A” acquisition system, meaning DoD’s requirements process, its budgeting mechanisms and its procurement bureaucracy, are actually three separate systems with completely different chains of command.

“Vague lines of authority and accountability result in a lack of transparency and access to accurate data. Stovepiped objectives fragment the system. Strategic objectives and investment decisions are misaligned, with execution driven by impractical timelines, strained personnel resources, and inadequate time for planning and debate,” the commission wrote in the second volume of its 2,000-page report to Congress. “To achieve its tactical and strategic goals, DoD needs to get the PPBE system right.”

Article link: https://www.redhat.com/en/open-source-stories

Dec 16, 2021

It’s about APIs; we’ll get to that shortly.

First there were containers

Now Docker is about containers: running complex software with a simple docker run postgres command was a revelation to software developers in 2013, unlocking agile infrastructure that they’d never known. And happily, as developers adopted containers as a standard build and run target, the industry realized that the same encapsulation fits nicely for workloads to be scheduled in compute clusters by orchestrators like Kubernetes and Apache Mesos. Containers have become the most important workload type managed by these schedulers, but as the title says that’s not what’s most valuable about Kubernetes.

Kubernetes is not about more general workload scheduling either (sorry Krustlet fans). While scheduling various workloads efficiently is an important value Kubernetes provides, it’s not the reason for its success.

Then there were APIs

Rather, the attribute of Kubernetes that’s made it so successful and valuable is that it provides a set of standard programming interfaces for writing and using software-defined infrastructure services. Kubernetes provides specifications and implementations – a complete framework – for designing, implementing, operating and using infrastructure services of all shapes and sizes based on the same core structures and semantics: typed resources watched and reconciled by controllers.
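The idea of "typed resources watched and reconciled by controllers" can be illustrated with a toy reconcile loop. This is a minimal sketch in plain Python that does not use any Kubernetes client library; the `Resource` type, the `reconcile` function and the in-memory `actual_state` dictionary are hypothetical stand-ins for a resource type, a controller and the real infrastructure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A typed resource: a kind, a name, and the state the user wants."""
    kind: str
    name: str
    replicas: int


def reconcile(desired: list[Resource], actual: dict[tuple[str, str], int]) -> list[str]:
    """Move `actual` toward `desired` and return the actions taken."""
    actions = []
    wanted = {(r.kind, r.name): r.replicas for r in desired}
    # Create or update anything whose observed state differs from the declared one.
    for key, replicas in wanted.items():
        if key not in actual:
            actions.append(f"create {key[0]}/{key[1]} replicas={replicas}")
        elif actual[key] != replicas:
            actions.append(f"scale {key[0]}/{key[1]} {actual[key]}->{replicas}")
        actual[key] = replicas
    # Delete anything that exists but is no longer declared.
    for key in [k for k in actual if k not in wanted]:
        actions.append(f"delete {key[0]}/{key[1]}")
        del actual[key]
    return actions


desired_state = [Resource("Deployment", "web", 3), Resource("Deployment", "db", 1)]
actual_state = {("Deployment", "web"): 1, ("Deployment", "old"): 2}

first_pass = reconcile(desired_state, actual_state)
second_pass = reconcile(desired_state, actual_state)  # already converged
print(first_pass)
print(second_pass)
```

The second pass returns no actions because the observed state already matches the declared state. That idempotence is the property that lets a controller run the same loop repeatedly without side effects.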

To elaborate, consider what preceded Kubernetes: a hodge-podge of hosted “cloud” services with different APIs, descriptor formats, and semantic patterns. We’d piece together compute instances, block storage, virtual networks and object stores in one cloud; and in another we’d create the same using entirely different structures and APIs. Tools like Terraform came along and offered a common format across providers, but the original structures and semantics remained as variegated as ever – a Terraform descriptor targeting AWS stands no chance in Azure!

Now consider what Kubernetes provided from its earliest releases: standard APIs for describing compute requirements as pods and containers; virtual networking as services and eventually ingresses; persistent storage as volumes; and even workload identities as attestable service accounts. These formats and APIs work smoothly within Kubernetes distributions running everywhere, from public clouds to private datacenters. Internally, each provider maps the Kubernetes structures and semantics to that hodge-podge of native APIs mentioned in the previous paragraph.

Kubernetes offers a standard interface for managing software-defined infrastructure – cloud, in other words. Kubernetes is a standard API framework for cloud services.

And then there were more APIs

Providing a fixed set of standard structures and semantics is the foundation of Kubernetes’ success. Following on this, its next act is to extend that structure to any and all infrastructure resources. Custom Resource Definitions (CRDs) were introduced in version 1.7 to allow other types of services to reuse Kubernetes’ programming framework. CRDs make it possible to request not only predefined compute, storage and network services from the Kubernetes API, but also databases, task runners, message buses, digital certificates, and whatever else a provider can imagine!
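To make the CRD idea concrete, here is a rough sketch of what a provider-defined "database" resource type and a request for one might look like, built as Python dictionaries and printed as JSON. The group `example.com`, the kind `Database` and the `engine` and `storageGiB` fields are invented for illustration and do not come from any real provider; the surrounding field layout follows the `apiextensions.k8s.io/v1` CRD format as I understand it, so check it against the official reference before relying on it.

```python
import json

GROUP = "example.com"   # hypothetical API group
PLURAL = "databases"    # hypothetical plural resource name

# The definition: teaches the API server about a new resource type.
crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    "metadata": {"name": f"{PLURAL}.{GROUP}"},  # must be <plural>.<group>
    "spec": {
        "group": GROUP,
        "scope": "Namespaced",
        "names": {"plural": PLURAL, "singular": "database", "kind": "Database"},
        "versions": [{
            "name": "v1alpha1",
            "served": True,
            "storage": True,
            "schema": {"openAPIV3Schema": {
                "type": "object",
                "properties": {"spec": {
                    "type": "object",
                    "properties": {
                        "engine": {"type": "string"},
                        "storageGiB": {"type": "integer"},
                    },
                }},
            }},
        }],
    },
}

# An instance: a user asking for one database of the new type.
database = {
    "apiVersion": f"{GROUP}/v1alpha1",
    "kind": "Database",
    "metadata": {"name": "orders"},
    "spec": {"engine": "postgres", "storageGiB": 20},
}

print(json.dumps(crd, indent=2))
print(json.dumps(database, indent=2))
```

The request for a database has the same shape as a request for a pod or a service (`apiVersion`, `kind`, `metadata`, `spec`), which is the reuse of structure the paragraph above describes. A controller written by the provider would still be needed to act on such a resource.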

As providers have sought to offer their services via the Kubernetes API as custom resources, the Operator Framework and related projects from SIG API Machinery have emerged to provide tools and guidance that minimize work required and maximize standardization across all these shiny new resource types. Projects like Crossplane have formed to map other provider resources like RDS databases and SQS queues into the Kubernetes API just like network interfaces and disks are handled by core Kubernetes controllers today. And Kubernetes distributors like Google and Red Hat are providing more and more custom resource types in their base Kubernetes distributions.

All of this isn’t to say that the Kubernetes API framework is perfect. Rather it’s to say that it doesn’t matter (much) because the Kubernetes model has become a de facto standard. Many developers understand it, many tools speak it, and many providers use it. Even with warts, Kubernetes’ broad adoption, user awareness and interoperability mostly outweigh other considerations.

With the spread of the Kubernetes resource model it’s already possible to describe an entire software-defined computing environment as a collection of Kubernetes resources. Like running a single artifact with docker run ..., distributed applications can be deployed and run with a simple kubectl apply -f .... And unlike the custom formats and tools offered by individual cloud service providers, Kubernetes descriptors are much more likely to run in many different provider and datacenter environments, because they all implement the same APIs.

Kubernetes isn’t about containers after all. It’s about APIs.

Article link: https://joshgav.github.io/2021/12/16/kubernetes-isnt-about-containers.html

Quantum effects in superconductors could give semiconductor technology a new twist. Researchers at the Paul Scherrer Institute PSI and Cornell University in New York State have identified a composite material that could integrate quantum devices into semiconductor technology, making electronic components significantly more powerful. They publish their findings today in the journal Science Advances.

Our current electronic infrastructure is based primarily on semiconductors. This class of materials emerged around the middle of the 20th century and has been improving ever since. Currently, the most important challenges in semiconductor electronics include further improvements that would increase the bandwidth of data transmission, energy efficiency and information security. Exploiting quantum effects is likely to be a breakthrough.

Quantum effects that can occur in superconducting materials are particularly worthy of consideration. Superconductors are materials in which the electrical resistance disappears when they are cooled below a certain temperature. The fact that quantum effects in superconductors can be utilized has already been demonstrated in the first quantum computers.

To find possible successors for today’s semiconductor electronics, some researchers—including a group at Cornell University—are investigating so-called heterojunctions, i.e. structures made of two different types of materials. More specifically, they are looking at layered systems of superconducting and semiconducting materials. “It has been known for some time that you have to select materials with very similar crystal structures for this, so that there is no tension in the crystal lattice at the contact surface,” explains John Wright, who produced the heterojunctions for the new study at Cornell University.

Two suitable materials in this respect are the superconductor niobium nitride (NbN) and the semiconductor gallium nitride (GaN). The latter already plays an important role in semiconductor electronics and is therefore well researched. Until now, however, it was unclear exactly how the electrons behave at the contact interface of these two materials—and whether it is possible that the electrons from the semiconductor interfere with the superconductivity and thus obliterate the quantum effects.

“When I came across the research of the group at Cornell, I knew: here at PSI we can find the answer to this fundamental question with our spectroscopic methods at the ADRESS beamline,” explains Vladimir Strocov, researcher at the Synchrotron Light Source SLS at PSI.

This is how the two groups came to collaborate. In their experiments, they eventually found that the electrons in both materials “keep to themselves.” No unwanted interaction that could potentially spoil the quantum effects takes place.

Synchrotron light reveals the electronic structures

The PSI researchers used a method well-established at the ADRESS beamline of the SLS: angle-resolved photoelectron spectroscopy using soft X-rays—or SX-ARPES for short. “With this method, we can visualize the collective motion of the electrons in the material,” explains Tianlun Yu, a postdoctoral researcher in Vladimir Strocov’s team, who carried out the measurements on the NbN/GaN heterostructure. Together with Wright, Yu is the first author of the new publication.

The SX-ARPES method provides a kind of map whose spatial coordinates show the energy of the electrons in one direction and something like their velocity in the other; more precisely, their momentum. “In this representation, the electronic states show up as bright bands in the map,” Yu explains. The crucial research result: at the material boundary between the niobium nitride NbN and the gallium nitride GaN, the respective “bands” are clearly separated from each other. This tells the researchers that the electrons remain in their original material and do not interact with the electrons in the neighboring material.

“The most important conclusion for us is that the superconductivity in the niobium nitride remains undisturbed, even if this is placed atom by atom to match a layer of gallium nitride,” says Vladimir Strocov. “With this, we were able to provide another piece of the puzzle that confirms: This layer system could actually lend itself to a new form of semiconductor electronics that embeds and exploits the quantum effects that happen in superconductors.”

Article link: https://techxplore-com.cdn.ampproject.org/c/s/techxplore.com/news/2021-12-semiconductors-quantum-world.amp

More information: Tianlun Yu et al, Momentum-resolved electronic band structure and offsets in an epitaxial NbN/GaN superconductor/semiconductor heterojunction, Science Advances (2021). DOI: 10.1126/sciadv.abi5833. www.science.org/doi/10.1126/sciadv.abi5833

Journal information: Science Advances

Provided by Paul Scherrer Institute

Over the past nearly 60 years, I have engaged with many leaders of governments, companies, and other organizations, and I have observed how our societies have developed and changed. I am happy to share some of my observations in case others may benefit from what I have learned.

Leaders, whatever field they work in, have a strong impact on people’s lives and on how the world develops. We should remember that we are visitors on this planet. We are here for 90 or 100 years at the most. During this time, we should work to leave the world a better place.

What might a better world look like? I believe the answer is straightforward: A better world is one where people are happier. Why? Because all human beings want to be happy, and no one wants to suffer. Our desire for happiness is something we all have in common.

But today, the world seems to be facing an emotional crisis. Rates of stress, anxiety, and depression are higher than ever. The gap between rich and poor and between CEOs and employees is at a historic high. And the focus on turning a profit often overrules a commitment to people, the environment, or society.

I consider our tendency to see each other in terms of “us” and “them” as stemming from ignorance of our interdependence. As participants in the same global economy, we depend on each other, while changes in the climate and the global environment affect us all. What’s more, as human beings, we are physically, mentally, and emotionally the same.

Look at bees. They have no constitution, police, or moral training, but they work together in order to survive. Though they may occasionally squabble, the colony survives on the basis of cooperation. Human beings, on the other hand, have constitutions, complex legal systems, and police forces; we have remarkable intelligence and a great capacity for love and affection. Yet, despite our many extraordinary qualities, we seem less able to cooperate.

In organizations, people work closely together every day. But despite working together, many feel lonely and stressed. Even though we are social animals, there is a lack of responsibility toward each other. We need to ask ourselves what’s going wrong.

I believe that our strong focus on material development and accumulating wealth has led us to neglect our basic human need for kindness and care. Reinstating a commitment to the oneness of humanity and altruism toward our brothers and sisters is fundamental for societies and organizations and their individuals to thrive in the long run. Every one of us has a responsibility to make this happen.

What can leaders do?

Be mindful

Cultivate peace of mind. As human beings, we have a remarkable intelligence that allows us to analyze and plan for the future. We have language that enables us to communicate what we have understood to others. Since destructive emotions like anger and attachment cloud our ability to use our intelligence clearly, we need to tackle them.

Fear and anxiety easily give way to anger and violence. The opposite of fear is trust, which, related to warmheartedness, boosts our self-confidence. Compassion also reduces fear, reflecting as it does a concern for others’ well-being. This, not money and power, is what really attracts friends. When we’re under the sway of anger or attachment, we’re limited in our ability to take a full and realistic view of the situation. When the mind is compassionate, it is calm and we’re able to use our sense of reason practically, realistically, and with determination.

Be selfless

We are naturally driven by self-interest; it’s necessary to survive. But we need wise self-interest that is generous and cooperative, taking others’ interests into account. Cooperation comes from friendship, friendship comes from trust, and trust comes from kindheartedness. Once you have a genuine sense of concern for others, there’s no room for cheating, bullying, or exploitation; instead, you can be honest, truthful, and transparent in your conduct.

Be compassionate

The ultimate source of a happy life is warmheartedness. Even animals display some sense of compassion. When it comes to human beings, compassion can be combined with intelligence. Through the application of reason, compassion can be extended to all 7 billion human beings. Destructive emotions are related to ignorance, while compassion is a constructive emotion related to intelligence. Consequently, it can be taught and learned.

The source of a happy life is within us. Troublemakers in many parts of the world are often quite well-educated, so it is not just education that we need. What we need is to pay attention to inner values.

The distinction between violence and nonviolence lies less in the nature of a particular action and more in the motivation behind the action. Actions motivated by anger and greed tend to be violent, whereas those motivated by compassion and concern for others are generally peaceful. We won’t bring about peace in the world merely by praying for it; we have to take steps to tackle the violence and corruption that disrupt peace. We can’t expect change if we don’t take action.

Peace also means being undisturbed, free from danger. It relates to our mental attitude and whether we have a calm mind. What is crucial to realize is that, ultimately, peace of mind is within us; it requires that we develop a warm heart and use our intelligence. People often don’t realize that warmheartedness, compassion, and love are actually factors for our survival.

Buddhist tradition describes three styles of compassionate leadership: the trailblazer, who leads from the front, takes risks, and sets an example; the ferryman, who accompanies those in his care and shares the ups and downs of the crossing; and the shepherd, who sees every one of his flock into safety before himself. Three styles, three approaches, but what they have in common is an all-encompassing concern for the welfare of those they lead.

The Dalai Lama is the spiritual leader of the Tibetan People. He was awarded the Nobel Peace Prize in 1989 and the U.S. Congressional Gold Medal in 2007. Rasmus Hougaard is the founder and managing director of Potential Project, a global leadership and organizational development firm, and the coauthor of the new book, The Mind of the Leader: How to Lead Yourself, Your People, and Your Organization for Extraordinary Results. He has created an app that will help you develop mindfulness, selflessness, and compassion in your leadership.

Edge computing is the practice of processing data physically closer to its source.

October 22, 2019

Smart cities. Remote surgeries. Fully autonomous vehicles. Voice-controlled home speakers. All of these innovative technologies are made possible thanks to edge computing. So …

What Is Edge Computing?

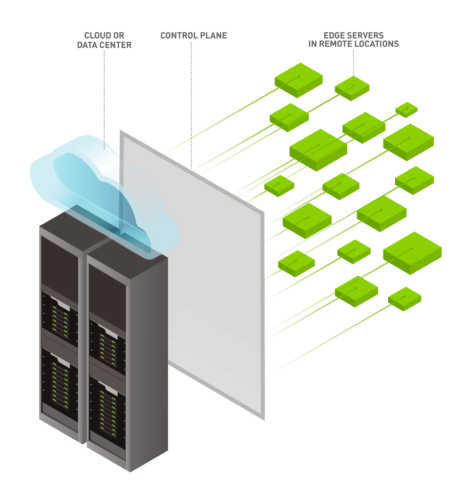

Edge computing is the practice of moving compute power physically closer to where data is generated, usually an IoT device or sensor. It is named for the way compute power is brought to the “edge” of a device or network. Edge computing is used to process data faster, increase bandwidth and ensure data sovereignty.

By processing data at a network’s edge, edge computing reduces the need for large amounts of data to travel between servers, the cloud, and devices or edge locations. This solves the infrastructure issues found in conventional data processing, such as latency and bandwidth. This is particularly important for modern applications such as data science and AI.
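As a rough illustration of how processing at the edge cuts the amount of data that has to travel, the sketch below summarizes a window of sensor readings locally and compares the size of the raw payload with the size of the summary. The `summarize` function and the synthetic temperature samples are assumptions made for this example; real deployments would pick whatever reduction suits the application.

```python
import json
import statistics


def summarize(readings: list[float]) -> dict:
    """Reduce a window of raw readings to a small summary at the edge."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


# Hypothetical window: 600 temperature samples from one sensor.
window = [20.0 + (i % 10) * 0.1 for i in range(600)]

raw_bytes = len(json.dumps(window).encode())
summary = summarize(window)
summary_bytes = len(json.dumps(summary).encode())

print(summary)
print(f"raw payload: {raw_bytes} bytes, summary payload: {summary_bytes} bytes")
```

Only the summary would need to leave the site, while the raw samples stay on the local network. The trade-off is that detail discarded at the edge is no longer available to anything upstream.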

For example, advanced industrial equipment increasingly features intelligent sensors powered by AI-capable processors that can do inferencing at the edge, also known as edge AI. These sensors monitor equipment and nearby machinery to alert supervisors of any anomalies that potentially jeopardize safe, continuous, and effective operations. In this use case, having AI processors physically present at the industrial site results in lower latency and the industrial equipment reacting more quickly to their environment.
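The monitoring pattern described here can be sketched with a much simpler technique than AI inferencing. The example below swaps in a rolling z-score check, a basic statistical test, purely to show the shape of an on-device loop that raises an alert without a round trip to the cloud. The `AnomalyDetector` class, its window and threshold values, and the vibration readings are all hypothetical.

```python
from collections import deque
import statistics


class AnomalyDetector:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def check(self, value: float) -> bool:
        """Return True if `value` looks anomalous against recent history."""
        anomalous = False
        values = list(self.history)
        if len(values) >= 5:  # wait for a minimal baseline first
            mean = statistics.fmean(values)
            stdev = statistics.pstdev(values)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline.
            self.history.append(value)
        return anomalous


detector = AnomalyDetector()
vibration = [1.0, 1.1, 0.9, 1.0, 1.2, 1.0, 0.9, 1.1, 5.0, 1.0]
alerts = [i for i, v in enumerate(vibration) if detector.check(v)]
print(alerts)
```

With these sample readings, only the spike at index 8 is flagged. Because the decision is made next to the sensor, the alert does not wait on network latency.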

The always-on, instantaneous feedback that edge computing offers is especially critical for applications where human safety is a factor, such as self-driving cars where saving even milliseconds of data processing and response times can be key to avoiding accidents. Or in hospitals, where doctors rely on accurate, real-time data to treat their patients.

Edge computing can be used everywhere sensors collect data — from retail stores for self-checkout and hospitals for remote surgeries, to warehouses with intelligent supply-chain logistics and factories with quality control inspections.

How Does Edge Computing Work?

Edge computing works by processing data as close to its source or end user as possible. It keeps data, applications and computing power away from a centralized network or data center.

Traditionally, data produced by sensors is often either manually reviewed by humans, left unprocessed or sent to the cloud or a data center for processing, and then sent back to the device. Relying solely on manual reviews results in slower, less efficient processes. Cloud computing provides computing resources; however, data travel and processing put a large strain on bandwidth and latency.

Bandwidth is the rate at which data is transferred over the internet. When data is sent to the cloud, it travels through a wide area network, which can be costly due to its global coverage and high bandwidth needs. When processing data at the edge, local area networks can be utilized, resulting in higher bandwidth at lower costs.

Latency is the delay in sending information from one point to the next. It’s reduced when processing at the edge because data produced by sensors and IoT devices no longer needs to be sent to a centralized cloud to be processed. Even on the zippiest fiber-optic networks, data can’t travel faster than the speed of light.
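The speed-of-light limit can be turned into a back-of-the-envelope lower bound on round-trip time. The sketch below assumes light in optical fiber travels at roughly two-thirds of its vacuum speed and ignores routing, queuing and processing delays, so real latencies will be higher. The three distances are hypothetical examples.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # in vacuum
FIBER_FACTOR = 2 / 3                # assumed: light in glass fiber travels at roughly 2/3 of c


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber, ignoring routing and processing."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_FACTOR)
    return 2 * one_way_s * 1000


# Hypothetical distances from a sensor to where its data is processed.
sites = [
    ("on-site edge server", 0.1),
    ("regional data center", 500),
    ("distant cloud region", 4000),
]
for label, km in sites:
    print(f"{label:>22}: {min_round_trip_ms(km):8.3f} ms")
```

Under these assumptions, a 500 km path costs about 5 ms per round trip before any processing happens, while an on-site server is effectively free of propagation delay.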

By bringing computing to the edge, or closer to the source of data, latency is reduced and bandwidth is increased, resulting in faster insights and actions.

Edge computing can run on one or multiple systems, closing the distance between where data is collected and where it is processed, which reduces bottlenecks and accelerates applications. An ideal edge infrastructure also involves a centralized software platform that can remotely manage all edge systems in one interface.

Why Is Edge Computing Needed?

Today, three technology trends are converging and creating use cases that are requiring organizations to consider edge computing: IoT, AI and 5G.

IoT: With the proliferation of IoT devices came the explosion of big data that businesses started to generate. As organizations suddenly took advantage of collecting data from every aspect of their businesses, they realized that their applications weren’t built to handle such large volumes of data.

Additionally, they came to realize that the infrastructure for transferring, storing and processing large volumes of data can be extremely expensive and difficult to manage. That may be why only a fraction of data collected from IoT devices is ever processed, in some situations as low as 25 percent.

And the problem is compounding. There are 40 billion IoT devices today and predictions from Arm show that there could be 1 trillion IoT devices by 2022. As the number of IoT devices grows and the amount of data that needs to be transferred, stored and processed increases, organizations are shifting to edge computing to alleviate the costs required to use the same data in cloud computing models.

AI: Similar to IoT, AI represents endless possibilities and benefits for businesses, such as the ability to glean real-time insights. Just as quickly as organizations are finding new use cases for AI, they’re discovering that those new use cases have requirements that their current cloud infrastructure can’t fulfill.

When organizations have bandwidth and latency infrastructure constraints, they have to cut corners on the amount of data they feed their models. This results in weaker models.

5G: 5G networks, which are expected to clock in 10x faster than 4G ones, are built to allow each node to serve hundreds of devices, increasing the possibilities for AI-enabled services at edge locations.

With edge computing’s powerful, quick and reliable processing power, businesses have the potential to explore new business opportunities, gain real-time insights, increase operational efficiency and improve their user experience.

What Are the Benefits of Edge Computing?

The shift to edge computing offers businesses new opportunities to glean insights from their large datasets. The main benefits of edge computing are:

- Lower Latency: By processing data at a network’s edge, data travel is reduced or eliminated, accelerating AI. This opens the door to advanced use cases with more complex AI models such as fully autonomous vehicles and augmented reality, which require low latency.

- Reduced Cost: Using a LAN for data processing means organizations can access higher bandwidth and storage at lower costs compared to cloud computing. Additionally, because processing happens at the edge, less data needs to be sent to the cloud or data center for further processing. There is also a decrease in the volume of data that needs to travel, which reduces costs further.

- Model Accuracy: AI relies on high-accuracy models, particularly for edge use cases that require instantaneous responses. When a network’s bandwidth is too low, it’s typically mitigated by reducing the size of data used for inferencing. This results in reduced image sizes, skipped frames in video and reduced sample rates in audio. When deployed at the edge, data feedback loops can be used to improve AI model accuracy and multiple models can be run simultaneously resulting in improved insights.

- Wider Reach: Internet access is required for traditional cloud computing. But edge computing processes data without internet access, extending its range to remote or previously inaccessible locations.

- Data Sovereignty: When data is processed at the location it is collected, edge computing allows organizations to keep all of their data and compute inside the LAN and company firewall. This reduces exposure both to cybersecurity attacks in the cloud and to strict, ever-changing data laws.

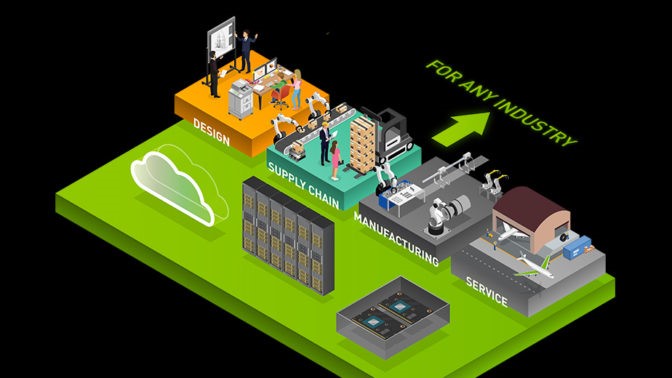

Edge Computing Use Cases Across Industries

Edge computing can bring real-time intelligence to businesses across industries, including retail, healthcare, manufacturing, hospitals and more.

Edge Computing for Retail

In the face of rapidly changing consumer demand, behavior and expectations, the world’s largest retailers enlist edge AI to deliver better experiences for customers.

With edge computing, retailers can boost their agility by:

- Reducing shrinkage: With in-store cameras and sensors leveraging AI at the edge to analyze data, stores can identify and prevent instances of errors, waste, damage and theft.

- Improving inventory management: Edge computing applications can use in-store cameras to alert store associates when shelf inventories are low, reducing the impact of stockouts.

- Streamlining shopping experiences: With edge computing’s fast data processing, retailers can implement voice ordering so shoppers can easily search for items, ask for product information and place online orders using smart speakers or other intelligent mobile devices.

Edge Computing for Smart Cities

Cities, school campuses, stadiums and shopping malls are a few examples of many places that have started to use AI at the edge to transform into smart spaces. These entities are using AI to make their spaces more operationally efficient, safe and accessible.

Edge computing has been used to transform operations and improve safety around the world in areas such as:

- Reducing traffic congestion: Nota uses computer vision to identify, analyze and optimize traffic. Cities use its offering to improve traffic flow, decrease traffic congestion-related costs and minimize the time drivers spend in traffic.

- Monitoring beach safety: Sightbit’s image-detection application helps spot dangers at beaches, such as rip currents and hazardous ocean conditions, allowing authorities to enact life-saving procedures.

- Increasing airline and airport operation efficiency: ASSAIA created an AI-enabled video analytics application to help airlines and airports make better and quicker decisions around capacity, sustainability and safety.

Edge Computing for Automakers and Manufacturers

Factories, manufacturers and automakers generate sensor data that can be cross-referenced to improve services.

Some popular use cases for promoting efficiency and productivity in manufacturing include:

- Predictive maintenance: Detecting anomalies early and predicting when machines will fail to avoid downtime.

- Quality control: Detecting defects in products and alerting staff instantly to reduce waste and improve manufacturing efficiency.

- Worker safety: Using a network of cameras and sensors equipped with AI-enabled video analytics to allow manufacturers to identify workers in unsafe conditions and to quickly intervene to prevent accidents.
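As a rough illustration of the predictive-maintenance item above, anomaly detection on a sensor stream can be sketched with a rolling z-score. This is a minimal sketch under stated assumptions, not a description of any vendor's method: the function name `find_anomalies`, the window size, the threshold and the sample vibration readings are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard deviations.

    The window and threshold are illustrative defaults, not tuned values.
    """
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat history to avoid dividing by zero.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical vibration readings with one spike at index 7.
vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 0.9, 4.2, 1.0, 1.1]
print(find_anomalies(vibration))
```

Running a check like this on the device that collects the readings, rather than in a remote data center, is what lets staff be alerted without a round trip to the cloud.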

Edge Computing for Healthcare

The combination of edge computing and AI is reshaping healthcare. Edge AI provides healthcare workers the tools they need to improve operational efficiency, ensure safety, and provide the highest-quality care experience possible.

Two popular examples of AI-powered edge computing within the healthcare sector are:

- Operating rooms: AI models built on streaming images and sensors in medical devices are helping with image acquisition and reconstruction, workflow optimizations for diagnosis and therapy planning, measurements of organs and tumors, surgical therapy guidance, and real-time visualization and monitoring during surgeries.

- Hospitals: Smart hospitals are using technologies such as patient monitoring, patient screening, conversational AI, heart rate estimation, radiology scanners and more. Human pose estimation is a popular computer vision task that estimates key points on a person’s body such as eyes, arms and legs. It can be used to help notify staff when a patient moves or falls out of a hospital bed.

NVIDIA at the Edge

The ability to glean faster insights can mean saving time, costs and even lives. That’s why enterprises are tapping into the data generated from the billions of IoT sensors found in retail stores, on city streets and in hospitals to create smart spaces.

But to do this, organizations need edge computing systems that deliver powerful, distributed compute, secure and simple remote management, and compatibility with industry-leading technologies.

NVIDIA brings together NVIDIA-Certified Systems, embedded platforms, AI software and management services that allow enterprises to quickly harness the power of AI at the edge.

Mainstream Servers for Edge AI: NVIDIA GPUs and BlueField data processing units (DPUs) provide a host of software-defined hardware engines for accelerated networking and security. These hardware engines allow for best-in-class performance, with all necessary levels of enterprise data privacy, integrity and reliability built in. NVIDIA-Certified Systems ensure that a server is optimally designed for running modern applications in an enterprise.

Management Solutions for Edge AI: NVIDIA Fleet Command is a cloud service that securely deploys, manages and scales AI applications across distributed edge infrastructure. Purpose-built for AI lifecycle management, Fleet Command offers streamlined deployments, layered security and detailed monitoring capabilities.

For organizations looking to build their own management solution, there is the NVIDIA GPU Operator. It uses the Kubernetes operator framework to automate the management of all NVIDIA software components needed to provision GPUs. These components include NVIDIA drivers to enable CUDA, a Kubernetes device plugin for GPUs, the NVIDIA container runtime, automatic node labeling and an NVIDIA Data Center GPU Manager-based monitoring agent.

Applications for Edge AI: To complement these offerings, NVIDIA has also worked with partners to create a whole ecosystem of software development kits, applications and industry frameworks in all areas of accelerated computing. This software is available to be remotely deployed and managed using the NVIDIA NGC software hub. AI and IT teams can get easy access to a wide variety of pretrained AI models and Kubernetes-ready Helm charts to implement into their edge AI systems.

The Future of Edge Computing

According to market research firm IDC’s “Future of Operations-Edge and IoT” webinar, the edge computing market will be worth $251 billion by 2025 and is expected to continue growing each year at a compound annual growth rate of 16.4 percent.
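To make the growth figure concrete, compounding can be worked through in a few lines. This is a back-of-the-envelope sketch, not an IDC forecast: it assumes the 16.4 percent rate simply continues to apply after 2025, and the function name `project_market` is illustrative.

```python
def project_market(value_bn, cagr, years):
    """Compound `value_bn` forward by `years` at annual growth rate `cagr`
    (for example, 0.164 for 16.4 percent)."""
    return value_bn * (1 + cagr) ** years


BASE_YEAR = 2025
BASE_VALUE_BN = 251.0  # figure quoted in the article
CAGR = 0.164           # rate quoted in the article; assumed to hold after 2025

for offset in range(4):
    projected = project_market(BASE_VALUE_BN, CAGR, offset)
    print(f"{BASE_YEAR + offset}: ${projected:,.1f} billion")
```

Because the rate compounds, each year's increase is larger in absolute terms than the one before it.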

The evolution of AI, IoT and 5G will continue to catalyze the adoption of edge computing. The number of use cases and the types of workloads deployed at the edge will grow. Today, the most prevalent edge use cases revolve around computer vision. However, there are lots of untapped opportunities in workload areas such as natural language processing, recommender systems and robotics.

The possibilities at the edge are truly limitless.

Article link: https://blogs.nvidia.com/blog/2019/10/22/what-is-edge-computing/

The Insidious Cyberthreat

When sounding the alarm over cyberthreats, policymakers and analysts have typically employed a vocabulary of conflict and catastrophe. As early as 2001, James Adams, a co-founder of the cybersecurity firm iDefense, warned in these pages that cyberspace was “a new international battlefield,” where future military campaigns would be won or lost. In subsequent years, U.S. defense officials warned of a “cyber–Pearl Harbor,” in the words of then Defense Secretary Leon Panetta, and a “cyber 9/11,” according to then Homeland Security Secretary Janet Napolitano. In 2015, James Clapper, then the director of national intelligence, said the United States must prepare for a “cyber Armageddon” but acknowledged it was not the most likely scenario. In response to the threat, officials argued that cyberspace should be understood as a “domain” of conflict, with “key terrain” that the United States needed to take or defend.

The 20 years since Adams’s warning have revealed that cyberthreats and cyberattacks are hugely consequential—but not in the way most predictions suggested. Spying and theft in cyberspace have garnered peta-, exa-, even zettabytes of sensitive and proprietary data. Cyber-enabled information operations have threatened elections and incited mass social movements. Cyberattacks on businesses have cost hundreds of billions of dollars. But while the cyberthreat is real and growing, expectations that cyberattacks would create large-scale physical effects akin to those caused by surprise bombings on U.S. soil, or that they would hurtle states into violent conflict, or even that what happened in the domain of cyberspace would define who won or lost on the battlefield haven’t been borne out. In trying to analogize the cyberthreat to the world of physical warfare, policymakers missed the far more insidious danger that cyber-operations pose: how they erode the trust people place in markets, governments, and even national power.

Correctly diagnosing the threat is essential, in part because it shapes how states invest in cybersecurity. Focusing on single, potentially catastrophic events, and thinking mostly about the possible physical effects of cyberattacks, unduly prioritizes capabilities that will protect against “the big one”: large-scale responses to disastrous cyberattacks, offensive measures that produce physical violence, or punishments only for the kinds of attacks that cross a strategic threshold. Such capabilities and responses are mostly ineffective at protecting against the way cyberattacks undermine the trust that undergirds modern economies, societies, governments, and militaries.

If trust is what’s at stake—and it has already been deeply eroded—then the steps states must take to survive and operate in this new world are different. The solution to a “cyber–Pearl Harbor” is to do everything possible to ensure it doesn’t happen, but the way to retain trust in a digital world despite the inevitability of cyberattacks is to build resilience and thereby promote confidence in today’s systems of commerce, governance, military power, and international cooperation. States can develop this resilience by restoring links between humans and within networks, by strategically distributing analog systems where needed, and by investing in processes that allow for manual and human intervention. The key to success in cyberspace over the long term is not finding a way to defeat all cyberattacks but learning how to survive despite the disruption and destruction they cause.

The United States has not so far experienced a “cyber 9/11,” and a cyberattack that causes immediate catastrophic physical effects isn’t likely in the future, either. But Americans’ trust in their government, their institutions, and even their fellow citizens is declining rapidly—weakening the very foundations of society. Cyberattacks prey on these weak points, sowing distrust in information, creating confusion and anxiety, and exacerbating hatred and misinformation. As people’s digital dependencies grow and the links among technologies, people, and institutions become more tenuous, this cyberthreat to trust will only become more existential. It is this creeping dystopian future that policymakers should worry about—and do everything possible to avert.

THE TIES THAT BIND

Trust, defined as “the firm belief in the reliability, truth, ability, or strength of someone or something,” plays a central role in economies, societies, and the international system. It allows individuals, organizations, and states to delegate tasks or responsibilities, thereby freeing up time and resources to accomplish other jobs, or to cooperate instead of acting alone. It is the glue that allows complex relationships to survive—permitting markets to become more complex, governance to extend over a broader population or set of issues, and states to trade, cooperate, and exist within more complicated alliance relationships. “Extensions of trust . . . enable coordination of actions over large domains of space and time, which in turn permits the benefits of more complex, differentiated, and diverse societies,” explains the political scientist Mark Warren.

Those extensions of trust have played an essential role in human progress across all dimensions. Primitive, isolated, and autocratic societies function with what sociologists call “particularized trust”—a trust of only known others. Modern and interconnected states require what’s called “generalized trust,” which extends beyond known circles and allows actors to delegate trust relationships to individuals, organizations, and processes with whom the truster is not intimately familiar. Particularized trust leads to allegiance within small groups, distrust of others, and wariness of unfamiliar processes or institutions; generalized trust enables complicated market interactions, community involvement, and trade and cooperation among states.

The modern market, for example, could not exist without the trust that allows for the delegation of responsibility to another entity. People trust that currencies have value, that banks can secure and safeguard assets, and that IOUs in the form of checks, credit cards, or loans will be fulfilled. When individuals and entities have trust in a financial system, wages, profits, and employment increase. Trust in laws about property rights facilitates trade and economic prosperity. The digital economy makes this generalized trust even more important. No longer do people deposit gold in a bank vault. Instead, modern economies consist of complicated sets of digital transactions in which users must trust not only that banks are securing and safeguarding their assets but also that the digital medium—a series of ones and zeros linked together in code—translates to an actual value that can be used to buy goods and services.

Trust is a basic ingredient of social capital—the shared norms and interconnected networks that, as the political scientist Robert Putnam has famously argued, lead to more peaceful and prosperous communities. The generalized trust at the heart of social capital allows voters to delegate responsibility to proxies and institutions to represent their interests. Voters must trust that a representative will promote their interests, that votes will be logged and counted properly, and that the institutions that write and uphold laws will do so fairly.

Finally, trust is at the heart of how states generate national power and, ultimately, how they interact within the international system. It allows civilian heads of state to delegate command of armed forces to military leaders and enables those military leaders to execute decentralized control of lower-level military operations and tactics. States characterized by civil-military distrust are less likely to win wars, partly because of how trust affects a regime’s willingness to give control to lower levels of military units in warfare. For example, the political scientist Caitlin Talmadge notes how Saddam Hussein’s efforts to coup-proof his military through the frequent cycling of officers through assignments, the restriction of foreign travel and training, and perverse regime loyalty promotion incentives handicapped the otherwise well-equipped Iraqi military. Trust also enables militaries to experiment and train with new technologies, making them more likely to innovate and develop revolutionary advancements in military power.

Trust also dictates the stability of the international system. States rely on it to build trade and arms control agreements and, most important, to feel confident that other states will not launch a surprise attack or invasion. It enables international cooperation and thwarts arms races by creating the conditions to share information—thus defeating the suboptimal outcome of a prisoner’s dilemma, wherein states choose conflict because they are unable to share the information required for cooperation. The Russian proverb “Doveryai, no proveryai”—“Trust, but verify”—has guided arms control negotiations and agreements since the Cold War.

In short, the world today is more dependent on trust than ever before. This is, in large part, because of the way information and digital technologies have proliferated across modern economies, societies, governments, and militaries, their virtual nature amplifying the role that trust plays in daily activities. This occurs in a few ways. First, the rise of automation and autonomous technologies—whether in traffic systems, financial markets, health care, or military weapons—necessitates a delegation of trust whereby the user is trusting that the machine can accomplish a task safely and appropriately. Second, digital information requires the user to trust that data are stored in the right place, that their values are what the user believes them to be, and that the data won’t be manipulated. Additionally, digital social media platforms create new trust dynamics around identity, privacy, and validity. How do you trust the creators of information or that your social interactions are with an actual person? How do you trust that the information you provide others will be kept private? These are relatively complex relationships with trust, all the result of users’ dependence on digital technologies and information in the modern world.

SUSPICION SPREADS

All the trust that is needed to carry out these online interactions and exchanges creates an enormous target. In the most dramatic way, cyber-operations generate distrust in how or whether a system operates. For instance, an exploit, which is a cyberattack that takes advantage of a security flaw in a computer system, can hack and control a pacemaker, causing distrust on the part of the patient using the device. Or a microchip backdoor can allow bad actors to access smart weapons, sowing distrust about who is in control of those weapons. Cyber-operations can lead to distrust in the integrity of data or the algorithms that make sense of data. Are voter logs accurate? Is that artificial-intelligence-enabled strategic warning system showing a real missile launch, or is it a blip in the computer code? Additionally, operating in a digital world can produce distrust in ownership or control of information: Are your photos private? Is your company’s intellectual property secure? Did government secrets about nuclear weapons make it into an adversary’s hands? Finally, cyber-operations create distrust by manipulating social networks and relationships and ultimately deteriorating social capital. Online personas, bots, and disinformation campaigns all complicate whether individuals can trust both information and one another. All these cyberthreats have implications that can erode the foundations on which markets, societies, governments, and the international system were built.

The digitally dependent economy is particularly vulnerable to degradations of trust. As the modern market has become more interconnected online, cyberthreats have grown more sophisticated and ubiquitous. Yearly estimates of the total economic cost of cyberattacks range from hundreds of billions to trillions of dollars. But it isn’t the financial cost of these attacks alone that threatens the modern economy. Instead, it is how these persistent attacks create distrust in the integrity of the system as a whole.

Nowhere was this more evident than in the public’s response to the ransomware attack on the American oil provider Colonial Pipeline. In May 2021, a criminal gang known as DarkSide shut down the pipeline, which provides about 45 percent of the fuel to the East Coast of the United States, and demanded a ransom, which the company ultimately paid. Despite the limited impact of the attack on the company’s ability to provide oil to its customers, people panicked and flocked to gas stations with oil tanks and plastic bags to stock up on gas, leading to an artificial shortage at the pump. This kind of distrust, and the chaos it causes, threatens the foundations not just of the digital economy but also of the entire economy.

The inability to safeguard intellectual property from cybertheft is similarly consequential. The practice of stealing intellectual property or trade secrets by hacking into a company’s network and taking sensitive data has become a lucrative criminal enterprise, one that states including China and North Korea use to catch up with the United States and other countries that have the most innovative technology. North Korea famously hacked the pharmaceutical company Pfizer in an attempt to steal its COVID-19 vaccine technology, and Chinese exfiltrations of U.S. defense industrial base research have led to copycat technological advances in aircraft and missile development. The more extensive and sophisticated such attacks become, the less companies can trust that their investments in research and development will lead to profit, ultimately destroying knowledge-based economies. And nowhere are the threats to trust more existential than in online banking. If users no longer trust that their digital data and their money can be safeguarded, then the entire complicated modern financial system could collapse. Perversely, the turn toward cryptocurrencies, most of which are not backed by government guarantees, makes trust in the value of digital information all the more critical.

Societies and governments are also vulnerable to attacks on trust. Schools, courts, and municipal governments have all become ransomware targets—whereby systems are taken offline or rendered useless until the victim pays up. In the cross hairs are virtual classrooms, access to judicial records, and local emergency services. And while the immediate impact of these attacks can temporarily degrade some governance and social functions, the greater danger is that over the long term, a lack of faith in the integrity of data stored by governments—whether marriage records, birth certificates, criminal records, or property divisions—can erode trust in the basic functions of a society. Democracy’s reliance on information and social capital to build trust in institutions has proved remarkably vulnerable to cyber-enabled information operations. State-sponsored campaigns that provoke questions about the integrity of governance data (such as vote tallies) or that fracture communities into small groups of particularized trust give rise to the kind of forces that foment civil unrest and threaten democracy.

Cyber-operations can also jeopardize military power, by attacking trust in modern weapons. With the rise of digital capabilities, starting with the microprocessor, states began to rely on smart weapons, networked sensors, and autonomous platforms for their militaries. As those militaries became more digitally capable, they also became susceptible to cyber-operations that threatened the reliability and functionality of these smart weapons systems. Whereas a previous focus on cyberthreats fixated on how cyber-operations could act like a bomb, the true danger occurs when cyberattacks make it difficult to trust that actual bombs will work as expected. As militaries move farther away from the battlefield through remote operations and commanders delegate responsibility to autonomous systems, this trust becomes all the more important. Can militaries have faith that cyberattacks on autonomous systems will not render them ineffective or, worse, cause fratricide or kill civilians? Furthermore, for highly networked militaries (such as that of the United States), lessons taken from the early information age led to doctrines, campaigns, and weapons that rely on complex distributions of information. Absent trust in information or the means by which it is being disseminated, militaries will be stymied—awaiting new orders, unsure of how to proceed.

Together, these factors threaten the fragile systems of trust that facilitate peace and stability within the international system. They make trade less likely, arms control more difficult, and states more uncertain about one another’s intentions. The introduction of cybertools for spying, attacks, and theft has only exacerbated the effects of distrust. Offensive cyber-capabilities are difficult to monitor, and the lack of norms about the appropriate uses of cyber-operations makes it difficult for states to trust that others will use restraint. Are Russian hackers exploring U.S. power networks to launch an imminent cyberattack, or are they merely probing for vulnerabilities, with no future plans to use them? Are U.S. “defend forward” cyber-operations truly to prevent attacks on U.S. networks or instead a guise to justify offensive cyberattacks on Chinese or Russian command-and-control systems? Meanwhile, the use of mercenaries, intermediaries, and gray-zone operations in cyberspace makes attribution and determining intent harder, further threatening trust and cooperation in the international system. For example, Israeli spyware aiding Saudi government efforts to repress dissent, off-duty Chinese military hacktivists, criminal organizations the Russian state allows but does not officially sponsor—all make it difficult to establish a clear chain of attribution for an intentional state action. Such intermediaries also threaten the usefulness of official agreements among states about what is appropriate behavior in cyberspace.

LIVING WITH FAILURE

To date, U.S. solutions to dangers in cyberspace have focused on the cyberspace part of the question—deterring, defending against, and defeating cyberthreats as they attack their targets. But these cyber-focused strategies have struggled and even failed: cyberattacks are on the rise, the efficacy of deterrence is questionable, and offensive approaches cannot stem the tide of small-scale attacks that threaten the world’s modern, digital foundations. Massive exploits—such as the recent hacks of SolarWinds’ network management software and Microsoft Exchange Server’s email software—are less a failure of U.S. cyberdefenses than a symptom of how the targeted systems were conceived and constructed in the first place. The goal should be not to stop all cyber-intrusions but to build systems that are able to withstand incoming attacks. This is not a new lesson. When cannons and gunpowder debuted in Europe in the fourteenth and fifteenth centuries, cities struggled to survive the onslaught of the new firepower. So states adapted their fortifications—dug ditches, built bastions, organized cavaliers, constructed extensive polygonal edifices—all with the idea of creating cities that could survive a siege, not stop the cannon fire from ever occurring. The best fortifications were designed to enable active defense, wearing the attackers down until a counterattack could defeat the forces remaining outside the city.

The fortification analogy invites an alternative cyberstrategy in which the focus is on the system itself—whether that’s a smart weapon, an electric grid, or the mind of an American voter. How does one build systems that can continue to operate in a world of degraded trust? Here, network theory—the study of how networks succeed, fail, and survive—offers guidance. Studies on network robustness find that the strongest networks are those with a high density of small nodes and multiple pathways between nodes. Highly resilient networks can withstand the removal of multiple nodes and linkages without decomposing, whereas less resilient, centralized networks, with few pathways and sparser nodes, have a much lower critical threshold for degradation and failure. If economies, societies, governments, and the international system are going to survive serious erosions of trust, they will need more bonds and links, fewer dependencies on central nodes, and new ways to reconstitute network components even as they are under attack. Together, these qualities will lead to generalized trust in the integrity of the systems. How can states build such networks?
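The contrast between centralized and densely linked networks can be illustrated with a toy experiment. The sketch below is only an illustration of the idea, not a model from the network-robustness literature: the two topologies (`star`, `ring_with_chords`), the ten-node size and the metric (share of surviving nodes in the largest connected piece) are assumptions chosen for simplicity.

```python
from collections import deque


def largest_component_fraction(adjacency, removed):
    """Fraction of surviving nodes that sit in the largest connected component."""
    alive = set(adjacency) - set(removed)
    if not alive:
        return 0.0
    seen, best = set(), 0
    for start in alive:
        if start in seen:
            continue
        # Breadth-first search over surviving nodes only.
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:
            node = queue.popleft()
            size += 1
            for neighbor in adjacency[node]:
                if neighbor in alive and neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        best = max(best, size)
    return best / len(alive)


def star(n):
    """Centralized network: node 0 is the hub; every other node links only to it."""
    adjacency = {i: set() for i in range(n)}
    for i in range(1, n):
        adjacency[0].add(i)
        adjacency[i].add(0)
    return adjacency


def ring_with_chords(n):
    """Decentralized network: each node links to its two nearest neighbors on each side."""
    adjacency = {i: set() for i in range(n)}
    for i in range(n):
        for step in (1, 2):
            j = (i + step) % n
            adjacency[i].add(j)
            adjacency[j].add(i)
    return adjacency


attacked = {0}  # take out a single node in each network
print("star:", largest_component_fraction(star(10), attacked))
print("ring:", largest_component_fraction(ring_with_chords(10), attacked))
```

Removing the hub leaves the star as a set of isolated nodes, while the ring with extra links stays in one piece because every node still has an alternative path to the others.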

First, at the technical level, networks and data structures that undergird the economy, critical infrastructure, and military power must prioritize resilience. This requires decentralized and dense networks, hybrid cloud structures, redundant applications, and backup processes. It implies planning and training for network failure so that individuals can adapt and continue to provide services even in the midst of an offensive cyber-campaign. It means relying on physical backups for the most important data (such as votes) and manual options for operating systems when digital capabilities are unavailable. For some highly sensitive systems (for instance, nuclear command and control), it may be that analog options, even when less efficient, produce remarkable resilience. Users need to trust that digital capabilities and networks have been designed to gracefully degrade, as opposed to catastrophically fail: the distinction between binary trust (that is, trusting the system will work perfectly or not trusting the system at all) and a continuum of trust (trusting the system to function at some percentage between zero and 100 percent) should drive the design of digital capabilities and networks. These design choices will not only increase users’ trust but also decrease the incentives for criminal and state-based actors to launch cyberattacks.

Making critical infrastructure and military power more resilient to cyberattacks would have positive effects on international stability. More resilient infrastructure and populations are less susceptible to systemic and long-lasting effects from cyberattacks because they can bounce back quickly. This resilience, in turn, decreases the incentives for states to preemptively strike an adversary online, since they would question the efficacy of their cyberattacks and their ability to coerce the target population. Faced with a difficult, costly, and potentially ineffective attack, aggressors are less likely to see the benefits of chancing the cyberattack in the first place. Furthermore, states that focus on building resilience and perseverance in their digitally enabled military forces are less likely to double down on first-strike or offensive operations, such as long-range missile strikes or campaigns of preemption. The security dilemma—when states that would otherwise not go to war with each other find themselves in conflict because they are uncertain about each other’s intentions—suggests that when states focus more on defense than offense, they are less likely to spiral into conflicts caused by distrust and uncertainty.

HUMAN RESOURCES

Solving the technical side, however, is only part of the solution. The most important trust relationships that cyberspace threatens are society’s human networks—that is, the bonds and links that people have as individuals, neighbors, and citizens so that they can work together to solve problems. Solutions for making these human networks more durable are even more complicated and difficult than any technical fixes. Cyber-enabled information operations target the links that build trust between people and communities. They undermine these broader connections by creating incentives to form clustered networks of particularized trust—for example, social media platforms that organize groups of like-minded individuals or disinformation campaigns that promote in-group and out-group divisions. Algorithms and clickbait designed to promote outrage only galvanize these divisions and decrease trust of those outside the group.

Governments can try to regulate these forces on social media, but those virtual enclaves reflect actual divisions within society. And there’s a feedback loop: the distrust that is building online leaks out into the real world, separating people further into groups of “us” and “them.” Combating this requires education and civic engagement—the bowling leagues that Putnam said were necessary to rebuild Americans’ social capital (Putnam’s book Bowling Alone, coincidentally, came out in 2000, just as the Internet was beginning to take off). After two years of a global pandemic and a further splintering of Americans into virtual enclaves, it is time to reenergize physical communities, time for neighborhoods, school districts, and towns to come together to rebuild the links and bonds that were severed to save lives during the pandemic. The fact is that these divisions were festering in American communities even before the pandemic or the Internet accelerated their consolidation and amplified their power. The solution, therefore, the way to do this kind of rebuilding, will not come from social media, the CEOs of those platforms, or digital tools. Instead, it will take courageous local leaders who can rebuild trust from the ground up, finding ways to bring together communities that have been driven apart. It will take more frequent disconnecting from the Internet, and from the synthetic groups of particularized trust that were formed there, in order to reconnect in person. Civic education could help by reminding communities of their commonalities and shared goals and by creating critical thinkers who can work for change within democratic institutions.

BOWLING TOGETHER

There’s a saying that cyber-operations lead to death by a thousand cuts, but perhaps a better analogy is termites, hidden in the recesses of foundations, that gradually eat away at the very structures designed to support people’s lives. The previous strategic focus on one-off, large-scale cyber-operations led to bigger and better cyber-capabilities, but it never addressed the fragility within the foundations and networks themselves.

Will cyberattacks ever cause the kind of serious physical effects that were feared over the last two decades? Will a strategy focused more on trust and resilience leave states uniquely vulnerable to this? It is of course impossible to say that no cyberattack will ever produce large-scale physical effects similar to those that resulted from the bombing of Pearl Harbor. But it is unlikely—because the nature of cyberspace, its virtual, transient, and ever-changing character, makes it difficult for attacks on it to create lasting physical effects. Strategies that focus on trust and resilience by investing in networks and relationships make these kinds of attacks yet more difficult. Therefore, focusing on building networks that can survive incessant, smaller attacks has a fortuitous byproduct: additional resilience against one-off, large-scale attacks. But this isn’t easy, and there is a significant tradeoff in both efficiency and cost for strategies that focus on resilience, redundancy, and perseverance over convenience or deterring and defeating cyberthreats. And the initial cost of these measures to foster trust falls disproportionately on democracies, which must cultivate generalized trust, as opposed to the particularized trust that autocracies rely on for power. This can seem like a tough pill to swallow, especially as China and the United States appear to be racing toward an increasingly competitive relationship.

Despite the difficulties and the cost, democracies and modern economies (such as the United States) must prioritize building trust in the systems that make societies run—whether that’s the electric grid, banks, schools, voting machines, or the media. That means creating backup plans and fail-safes, making strategic decisions about what should be online or digital and what needs to stay analog or physical, and building networks—both online and in society—that can survive even when one node is attacked. If a stolen password can still take out an oil pipeline or a fake social media account can continue to sway the political opinions of thousands of voters, then cyberattacks will remain too lucrative for autocracies and criminal actors to resist. Failing to build in more resilience—both technical and human—will mean that the cycle of cyberattacks and the distrust they give rise to will continue to threaten the foundations of democratic society.

Article link: https://www-foreignaffairs-com.cdn.ampproject.org/c/s/www.foreignaffairs.com/articles/world/2021-12-14/world-without-trust?amp

by Marshall W. Van Alstyne and Geoffrey G. Parker

December 17, 2021

Since at least the 1980s, firms have engaged in digital transformations by coordinating, automating, and outsourcing productive activity. Client-server architectures replaced mainframes, remaking supply chains and fostering decentralization. Enterprise Resource Planning (ERP) and Customer Relationship Management (CRM) systems automated back-office and front-office processes. Shifts to cloud and SaaS have changed software evolution and the economics of renting versus owning. Machine learning and artificial intelligence uncover patterns that drive new products and services. During the Covid-19 pandemic, virtual interactions replaced physical interactions out of sheer necessity.

Some of these changes were as straightforward as converting processes from analog to digital. In other cases, companies changed how they worked or what they did.

Yet, amidst all this transformation, something novel — and perhaps fundamental — has changed: where and how companies create value has shifted. More and more, value creation comes from outside the firm not inside, and from external partners rather than internal employees. We call this new production model an “inverted firm,” a change in organizational structure that affects not only the technology but also the managerial governance that attends it.

The most obvious examples of this trend are the platform firms Google, Apple, Facebook, Amazon, and Microsoft. They have managed to achieve scale economies in revenues per employee that would put the hyperscalers of the 19th and early 20th centuries to shame. Facebook and Google do not author the posts or web pages they deliver. Apple, Microsoft, and Google do not write the vast majority of apps in their ecosystems. Alibaba and Amazon never purchase or make an even vaster number of the items they sell. Smaller firms, modeled on platforms, show this same pattern. Sampling from the Forbes Global 2000, platform firms compared to industry controls had much higher market values ($21,726 M vs. $8,243 M), much higher margins (21% vs. 12%), but only half the employees (9,872 vs. 19,000).
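The Forbes Global 2000 comparison above can be turned into a per-employee figure with a few lines of arithmetic. The sketch below is only illustrative: it uses the sample averages quoted in the paragraph, the helper name `value_per_employee` is hypothetical, and dividing one group average by another is not the same as averaging each firm's own ratio, so the result is a rough indication rather than a finding of the study.

```python
# Sample averages quoted in the paragraph above (market value in $ millions).
platform = {"market_value_musd": 21_726, "employees": 9_872}
control = {"market_value_musd": 8_243, "employees": 19_000}


def value_per_employee(group):
    """Market value per employee in $ millions (hypothetical helper)."""
    return group["market_value_musd"] / group["employees"]


platform_vpe = value_per_employee(platform)
control_vpe = value_per_employee(control)

# How many times more market value each employee "carries" at a platform firm.
ratio = platform_vpe / control_vpe

print(f"Platform firms: ${platform_vpe:.2f}M per employee")
print(f"Control firms:  ${control_vpe:.2f}M per employee")
print(f"Ratio:          {ratio:.1f}x")
```

On these averages, platform firms carry roughly five times the market value per employee of their industry controls, which is the gap the "inverted firm" argument sets out to explain.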

In the past, high revenues per employee gave evidence of highly automated or capital-intensive operations such as refining, oil exploration, and chip making. Indeed, automation allowed Vodafone to reduce headcount for managing 3 million invoices per year from over 1,000 full-time employees to only 400. But this time, the transformation is different. Inverted firms are achieving far higher market capitalization per employee not by automating or by shifting labor to capital but by coordinating external value creation.

The highest value digital transformation comes from firm inversion, that is, moving from value the firm alone creates to value it helps orchestrate. Cultivating a successful platform means providing the tools and the market to help partners grow. By contrast, incumbents typically use digital transformation to improve the efficiency of their current operations. New revenue projections typically focus on value capture. Of course, digital transformation can and should support operating efficiency, and this often comes first, but it cannot stop there. Digital investments must set the firm up to partner with users, developers, and merchants, at scale, with a focus on value creation, which is the foundation of firm inversion. Unconstrained by the resources the firm alone controls, inverted firms harness and orchestrate resources that others control.

How Inverted Firms Create Value

The most compelling evidence in favor of digital transformation as firm inversion comes from a recent study of 179 firms that adopted application programming interfaces (APIs). As interface technology, APIs allow firms to modularize their systems to facilitate replacement and upgrades. APIs also serve as “permissioning” technology that grants outsiders carefully metered access to internal resources. These functions not only allow a firm to quickly reconfigure systems in response to problems and opportunities but also allow outsiders to build on top of the firm’s digital real estate. Researchers (including one of the authors) classified firms based on whether API adopters used them for internal capital adjustment, the upgrades and opportunities the firm pursued itself, or used APIs for externally facing platform business models that allow developers and other partners to create their own upgrades and opportunities.

The difference in results between these two approaches is striking. Measured in terms of increased market capitalization, gains for firms that took the internal efficiency route were inconclusive. By contrast, firms that took the external platform route, becoming inverted firms, grew an average of 38% over sixteen years. Digital transformation of the latter type drove huge increases in value.

Inverted firms rely heavily on engagement from their external contributors. This strategy relies on partners the firm does not know volunteering ideas the firm does not have — a very different process than outsourcing, where the firm knows what it wants and contracts with the best known supplier. For firm inversion to work, others must join the ecosystem; otherwise, it’s about as useful as hosting a potluck where nobody comes. Good management is what gets you RSVPs and guests creating good things to share. How new guests are rewarded, what resources they are given, and the willingness of the firm to help create that value can determine whether previously unknown partners choose to add value. This requires a different managerial mindset, from controlling to enabling, and from capturing to rewarding. The more that a firm can coax partners to volunteer investments, ideas, and effort, the more this external ecosystem thrives.

To attract partners, these inverted firms follow one simple rule: “Create more value than you take.” A little reflection shows the rule’s potency. People happily volunteer investments in time, ideas, resources, and market expansion when they get value in return. Partners flock to a firm that makes them more valuable, which in turn helps the firm’s ecosystem flourish. By contrast, a firm that takes more value than it creates drives people away. Why should they cook in a kitchen where the head chef keeps all the sales or build on digital real estate where the landlord takes all the rent? Such ecosystems wither.

Good platform husbandry means taking no more than 30% of the value, and it can be far less. Too many product firms start from the bad habit of asking “How do we make money?” when instead they should start by asking “How do we create value?” and “How do we help others create value?” Only by creating value is one entitled to make money.

The New Rules of Creating Value

Firm value used to be tied to tangible assets, but that is no longer the case. IP valuation firm Ocean Tomo has documented a 30-year trend of shifting firm values from tangible to intangible assets. As of its 2020 accounting, intangible assets made up 90% of the valuation of S&P 500 firms. Of course, intangible assets cover a broad range of things, including the value of brand, intellectual property, and goodwill. Those assets, however, were known long before the 1980s.

Among inverted firms, the network effects that arise when partners create value for one another are a major source of growth in intangible assets. Adding the ability to coordinate value creation and exchange — from user to user, partner to partner, and partner to user — is one way that traditional firms transform. It also provides means to scale. Transforming atoms to bits improves margins and reach. Transforming from inside to outside magnifies ideas and resources.

To be sure, firm inversion entails risks of outside interference and partner negligence. If partners are part of the value proposition, then a brand can suffer when that proposition fails. One coauthor’s family member rented a host’s home on Airbnb only to discover it had no shower or bathroom, a fact carefully omitted from the service description. Airbnb quickly stepped in to discipline the host and provide the renter with a nicer no-cost accommodation. Relying on third-party producers also entails having strong quality curation of partner offerings and a rapid ability to swap in one’s own or a different partner’s offering. Furthermore, those that expose their data and systems to outsiders can face increased risk of cyberattack. This means taking responsibility and being a good steward of others’ data. Getting data means giving value back and protecting those who share it. On balance, those firms that understand and mitigate these risks significantly outperform the firms that stay closed, which avoid the downside only by also forgoing the upside.

Creating the inverted firm has a number of important implications. Most important is that there are likely multiple holes in an organization’s skill set regarding orchestration of third party value. Adopting digital technology alone will not transform an internal organizational structure to one that functions externally. Executives must understand and undertake partner relationship management, partner data management, partner product management, platform governance, and platform strategy. They must learn how to motivate people they don’t know to share ideas they don’t have. Firms as diverse as Barclays Bank, Nike, John Deere, Ambev, Siemens, and Albertsons have listed 200,000 job openings for these platform functions and to run their increasingly inverted firms. Indeed, firms that look only inward will be the ones that fail to move upward.

Article link: https://hbr-org.cdn.ampproject.org/c/s/hbr.org/amp/2021/12/digital-transformation-changes-how-companies-create-value

Marshall W. Van Alstyne (mva@bu.edu) is the Questrom Chaired Professor at Boston University School of Business. His work has more than 10,000 citations. He co-authored Platform Revolution (W.W. Norton & Company, 2016), the April 2016 HBR article “Pipelines, Platforms, and the New Rules of Strategy” and the October 2006 HBR article “Strategies for Two-Sided Markets,” an HBR all time top 50. Follow him on Twitter @InfoEcon.

Geoffrey G. Parker (gparker@dartmouth.edu) is a professor of engineering at Dartmouth College and a research fellow at MIT’s Initiative on the Digital Economy. He co-authored Platform Revolution (W.W. Norton & Company, 2016), the April 2016 HBR article “Pipelines, Platforms, and the New Rules of Strategy” and the October 2006 HBR article “Strategies for Two-Sided Markets,” an HBR all time top 50. Follow him on Twitter @g2parker.