Cathy Li

Head, AI, Data and Metaverse; Member of the Executive Committee, World Economic Forum

- This round-up brings you key digital technology stories from the past fortnight.

- Top headlines: UN’s call to big tech companies over product harm; Nvidia briefly becomes most valuable company in the world; McDonald’s to end test run of AI chatbots at drive-thrus.

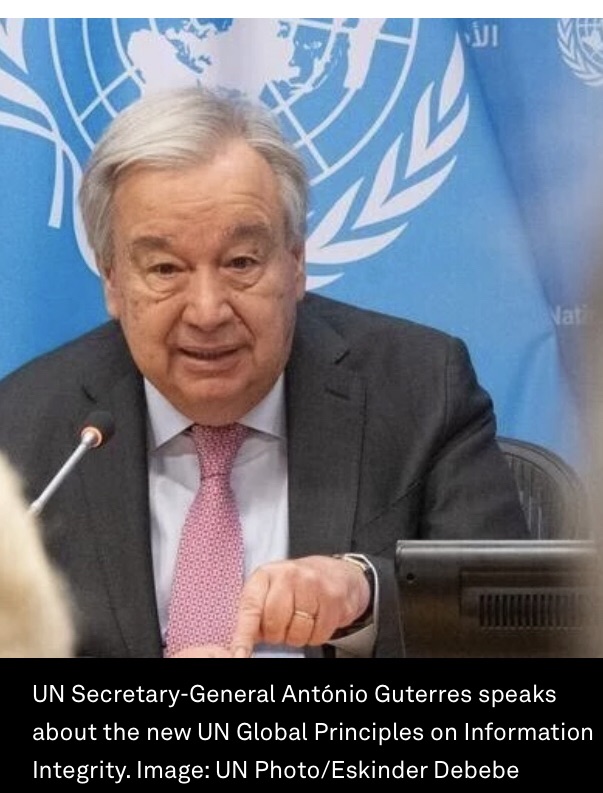

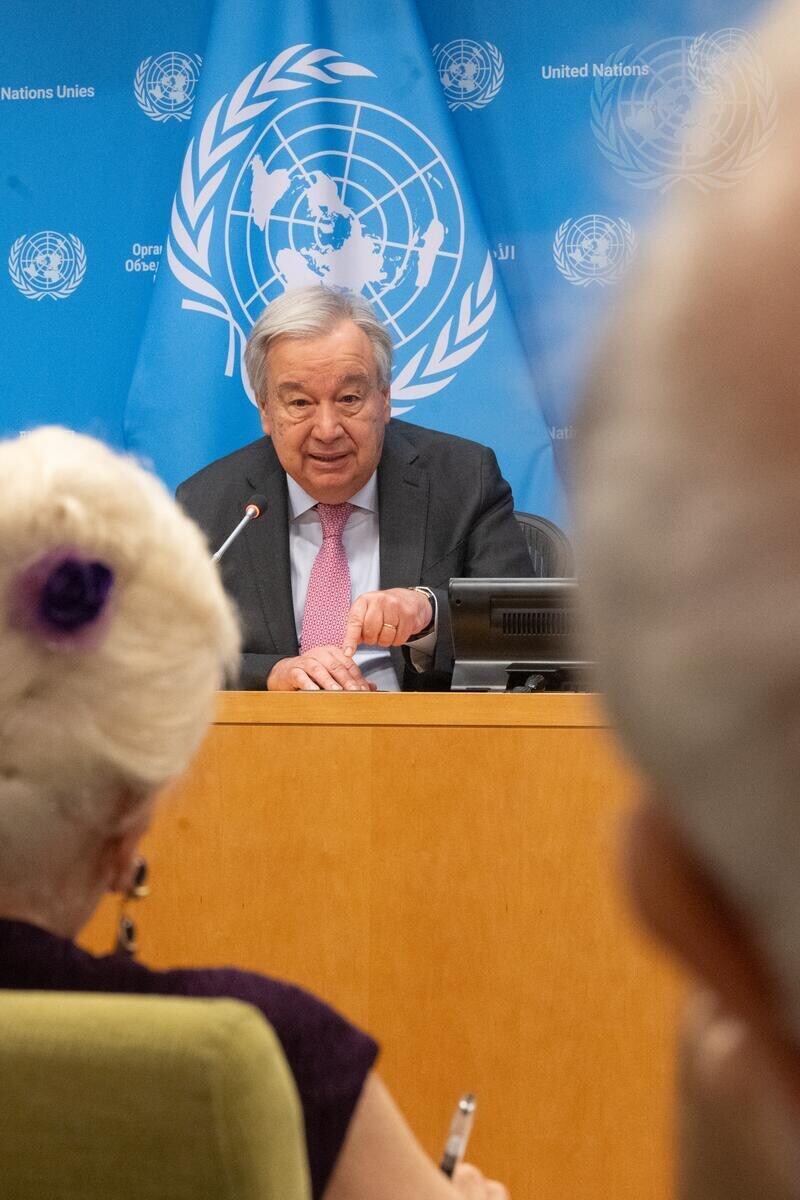

UN chief urges tech firms to ‘acknowledge the damage’ their products are causing

António Guterres, Secretary-General of the United Nations (UN), has called on technology firms to “acknowledge the damage your products are inflicting on people and communities”.

Speaking at the launch of the UN’s Global Principles for Information Integrity, he said that opaque algorithms on social media platforms “push people into information bubbles and reinforce prejudices including racism, misogyny and discrimination of all kinds”.

UN Secretary-General António Guterres launches the new UN Global Principles for Information Integrity. Image: UN Photo/Eskinder Debebe

“You have the power to mitigate harm to people and societies around the world,” he said. “You have the power to change business models that profit from disinformation and hate.”

His comments come after several high-profile calls for social media companies to do more to protect vulnerable people, especially children.

Earlier in the month, New York passed legislation to protect children from addictive social media content. Similarly, the US Surgeon General, Vivek Murthy, called for social media apps to carry warning labels reminding users that the platforms can cause harm to young people.

Writing in an op-ed for the New York Times, he said that while this would not make the platforms safe, it could increase awareness among young people and influence their behaviour.

And in the UK, a group of bereaved parents is calling on social media companies to grant them access to their children’s data following the children’s deaths. One child ended their life after viewing harmful content, while another may have died after participating in a social media ‘challenge’.

Misinformation and disinformation are predicted to be the biggest global risk over the next two years. Image: World Economic Forum Global Risks Report 2024

AI boom sees Nvidia briefly become world’s most valuable company

Nvidia became the world’s most valuable company in June, overtaking Microsoft and Apple to hit a market value of $3.34 trillion. The company’s microchips are playing a key part in the development and advancement of artificial intelligence (AI) technology. Microsoft retook the top spot a few days later following a stock selloff.

Despite the fall, many investors expect Nvidia to continue rising in valuation in the future. The business has seen its stock surge 180% in 2024 alone. At the time of writing, The Guardian reported a 2.8% rise in the company’s shares in early trading on 25 June.

The company started the month by unveiling its next generation of processors – Rubin – less than three months after launching the previous generation, the Blackwell chip.

News in brief: Digital technology stories from around the world

McDonald’s is to halt testing of AI chatbots at drive-thrus. The systems, which had been implemented in over 100 US locations, featured an AI voice that could respond to customer orders. The company has not given a reason for ending its test run, but shortcomings in the technology, including multiplying or incorrectly adding items, have been shared on social media.

A film credited to ChatGPT 4.0 has had its premiere cancelled. The Last Screenwriter was due to debut at the Prince Charles Cinema in London, but a backlash has seen the screening withdrawn. Speaking to the Daily Beast, director Peter Luisi said: “I think people don’t know enough about the project. All they hear is ‘first film written entirely by AI’ and they immediately see the enemy, and their anger goes towards us.”

SAP is restructuring 8,000 jobs to focus on AI-driven business areas, the company announced. It will spend €2 billion ($2.2 billion) to retrain employees or replace them through voluntary redundancy programs.

And US record labels are suing two prominent AI music generators, Suno and Udio, reports Wired. Universal Music Group, Warner Music Group and Sony Music Group filed lawsuits in US federal court, alleging copyright infringement. In a press release, Recording Industry Association of America chair and CEO Mitch Glazier said: “Unlicensed services like Suno and Udio that claim it’s ‘fair’ to copy an artist’s life’s work and exploit it for their own profit without consent or pay set back the promise of genuinely innovative AI for us all.”

More on digital technology on Agenda

Digital twin technologies have the potential to drive innovation in the industrial sector, allowing for improvements in efficiency without sacrificing productivity. See how the technology could support integrated digital ecosystems and help the energy sector in this piece.

As we face the fourth industrial revolution, better knowledge exchange between businesses and governments will help facilitate faster growth in tech-enabled industries. See how regions like Karnataka in India can become attractive locations for businesses developing AI solutions in this article.

Spatial computing, blockchain and AI have all generated excitement in recent years. But their potential is set to grow further as synergies between the technologies are realized. Learn about some of the industries already witnessing the transformative impact of these key technologies as they converge.

Article link: https://www.weforum.org/agenda/2024/07/ai-regulation-digital-news-july-2024/