Artificial intelligence and machine learning are being integrated into chatbots, patient rooms, diagnostic testing, research studies and more, all to improve innovation, discovery and patient care.

The age of artificial intelligence (AI) and machine learning has arrived, and with it comes the promise to revolutionize healthcare. By some projections, AI in healthcare will become a $188 billion industry worldwide by 2030. But what will that actually look like? How might AI be used in a medical context? And what can you expect from AI when it comes to your own personal healthcare?

Already, healthcare providers, surgeons and researchers are using AI to develop new drugs and treatments, diagnose complex conditions more efficiently and improve patients’ access to critical care — and this is only the beginning.

Our experts share how AI is being used in healthcare systems right now and what we can expect down the line as innovation and experimentation continue.

What are the benefits of using AI in healthcare and hospitals?

Artificial intelligence describes the use of computers to do certain jobs that once required human intelligence. Examples include recognizing speech, making decisions and translating between different languages.

Machine learning is a branch of AI in which computer programs learn from data instead of following only hand-written instructions. It uses very large datasets and algorithms to learn how to do complex tasks and solve problems, much as a human learns from experience.

When used together, AI and machine learning can help us be more efficient and effective than ever before. These tools are being used with thousands of datasets to improve our ability to research various diseases and treatment options. These tools are also used behind the scenes, even before patients arrive onsite for care, to improve the patient experience.

From radiology to neurology, emergency response services, administrative services and beyond, AI is changing the way we take care of ourselves and each other. In many ways, these innovations are forcing us to confront age-old questions: How can we continue to push ourselves to be better at what we already do well? And what’s left to learn as we embrace groundbreaking technology?

“AI is no longer just an interesting idea, but it’s being used in a real-life setting,” says Cleveland Clinic’s Chief Digital Officer Rohit Chandra, PhD. “Today, there’s a decent chance a computer can read an MRI or an X-ray better than a human, so it’s relatively advanced in those use-cases. But then at the other extreme, you’ve got generative AI like ChatGPT and all sorts of cool stuff you hear about in the media that’s fascinating technology, but less mature. The potential for it is there and it’s also quite promising.”

To that end, Cleveland Clinic has become a founding member of a global effort to create an AI Alliance — an international community of researchers, developers and organizational leaders all working together to develop, achieve and advance the safe and responsible use of AI. The AI Alliance, started by IBM and Meta, now includes over 90 leading AI technology and research organizations to support and accelerate open, safe and trusted generative AI research and development. Cleveland Clinic will lead the effort to accelerate and enhance the ethical use of AI in medical research and patient care.

An example of Cleveland Clinic’s commitment to AI innovation is the Discovery Accelerator, a 10-year strategic partnership between IBM and Cleveland Clinic, focused on accelerating biomedical discovery.

“Biomedical research is changing from a discipline that was once exclusively reliant on experiments in a lab done on a bench with animal models or biological samples to a discipline that involves heavy and fast computational tools,” says the Accelerator’s executive lead and Cleveland Clinic’s Chief Research Information Officer Lara Jehi, MD.

“That shift has happened because the data we now have at our disposal is way more than what we had even just 10 years ago,” she continues. “We can now measure in detail the genetic composition of every single cell in the human body. We can measure in detail how that genetic composition is translating itself to proteins that our body is making, and how those proteins are influencing the function of different organs in our body.”

AI and machine learning are being integrated into every step of the patient care process — from research to diagnosis, treatment and aftercare. And that means the field of healthcare is forever changing. These kinds of changes require new approaches to medical science and new skill sets for incoming nurses, doctors and surgeons interested in working in the medical field.

How fast is this technology moving? Dr. Jehi says that if we compared our understanding of the human body from just 10 years ago with what today’s AI-assisted measurement tools reveal, we’d have a completely different outlook on how the body works.

“The advances in AI would be like taking a fuzzy black and white picture from the 1800s and comparing it to one from an iPhone 14 Pro with high definition and color,” she illustrates. “This is the difference with the scale and the resolution of the data that we have to work with now.”

So, what do AI and machine learning look like in practice? Well, depending on the area of focus, the medical specialty and what’s needed, AI can be used in a variety of ways to improve patient outcomes.

Diagnostics

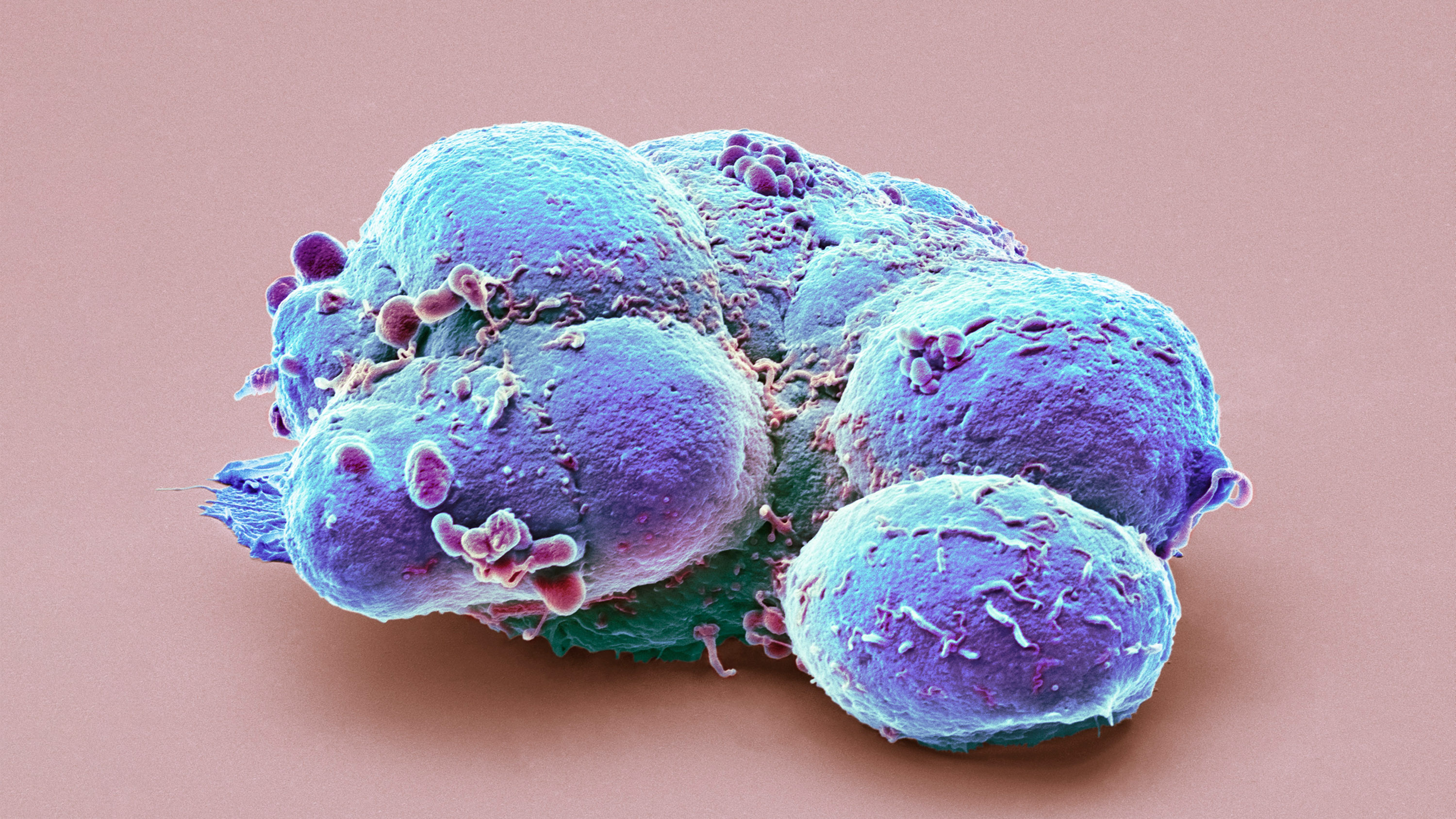

Broken bones, breast cancer, brain bleeds — these conditions and many others, no matter how complex, need the right kind of tools to make a diagnosis. And often, a patient’s journey depends on receiving the right diagnosis.

“In radiology, technology and computers are used every day by doctors to identify diseases before anyone else,” shares diagnostic radiologist Po-Hao Chen, MD. “In many cases, a radiologist is the first one to call the disease when it happens.”

But how does AI fit into diagnostic testing? Well, let’s revisit the definition of machine learning.

Let’s say you show a computer program a series of X-rays, some of which show bone fractures and some of which don’t. After reviewing those images, the program tries to guess which ones show fractures. When it gets some of those answers wrong, you give it the correct answers. Then, you feed it another series of X-rays and have it run again with that new knowledge. Over time, the program gets better at telling what’s a bone fracture and what’s not. Each time this process repeats, its decisions become more accurate.
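The guess-and-correct loop above is a form of supervised learning. As a rough illustration only, the Python sketch below uses one of the simplest learning rules, a perceptron, which adjusts its internal weights only when a guess is wrong. It assumes each X-ray has already been boiled down to two made-up numbers with hypothetical labels; real fracture-detection tools learn directly from image pixels, using much larger neural networks and datasets.

```python
from typing import List, Tuple

# Assumption: each "X-ray" is reduced to two made-up numeric features.
# Label: 1 = fracture, 0 = no fracture (hypothetical data, not real images).
Example = Tuple[List[float], int]


def predict(weights: List[float], bias: float, features: List[float]) -> int:
    """Guess 1 (fracture) if the weighted sum is positive, else 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(examples: List[Example], rounds: int = 20, lr: float = 0.1) -> Tuple[List[float], float]:
    """Perceptron training: adjust the weights only when a guess is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(rounds):
        mistakes = 0
        for features, label in examples:
            error = label - predict(weights, bias, features)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                # "Give it the correct answer": nudge the weights toward the right label
                weights = [w + lr * error * x for w, x in zip(weights, features)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no wrong guesses, so stop early
            break
    return weights, bias


# Hypothetical labeled training set
training: List[Example] = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.6], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.2], 0),
]
weights, bias = train(training)
print(predict(weights, bias, [0.85, 0.75]))  # prints 1
```

Each pass through the training set is one round of showing the examples, checking the guesses and correcting the mistakes. Training stops once a full pass produces no wrong answers.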

Now, imagine that same process, but with hundreds or thousands of other datasets and other conditions. You can probably see how AI can help pinpoint and identify findings with the help of a radiologist’s expertise.

“It works like a second pair of eyes, like a shoulder-to-shoulder partner,” says Dr. Chen. “The combined team of human plus AI is when you get the best performance.”

The radiologists of the future will have a very different skill set compared to radiologists who excel today, notes Dr. Chen. And that future skill set will involve a significant portion of AI know-how.

“It wasn’t that long ago when almost all radiology was done on physical film that you held in your hand,” he adds. “As radiology became computerized, doctors had to enhance their skill set. AI is changing digital radiology the same way digital radiology changed film.”

Breast cancer

Breast cancer radiology has shown promising results using AI, according to breast cancer radiologist Laura Dean, MD.

“Everyone’s breast tissue is like their fingerprint or their handprint,” she clarifies. “In other words, breast cancer can look very different from one patient to another. So, what we look for are very subtle changes in the appearance of the patient’s own breast pattern. This is where we are really seeing an advantage of using AI in our interpretations.”

Breast cancer experts widely agree that annual screening mammography beginning at age 40 provides the most life-saving benefits.

“In breast imaging exams, we’re looking to see if the patterns in someone’s breast tissue look stable. A very important part of mammography interpretation is pattern recognition,” explains Dr. Dean. “Are there areas that are new or changing or different? Are there areas where the tissue just looks a little bit different or is there a unique finding in the breast?”

It’s up to the radiologist to review the 3D images and search for areas of density, calcifications (which can be early signs of cancer), architectural distortion (areas where tissue looks like it’s pulling the surrounding tissue) and other areas of concern.

“A lot of cancers are really, really subtle. They can be really hard to see, depending on the patient’s breast tissue, the type of breast cancer, how the tissue is evolving and how the cancer is developing,” Dr. Dean notes. “If every breast cancer were one of those obvious textbook spiculated masses with calcifications, it would make my job a lot easier. But as our technology continues to improve, many of the cancers we’re seeing now are really, really subtle. Those subtle cancers are the areas where I think AI has shown a lot of promise.”

There are now several AI-detection programs available for use in mammography. The first one to get approval from the U.S. Food and Drug Administration is iCAD’s ProFound AI, which can compare a patient’s mammogram against a learned dataset to pinpoint and circle areas of concern and potentially cancerous regions. When the AI identifies these areas, the program also highlights its confidence level that those findings could be malignant. For example, a confidence level of 92% means that in the dataset of known cancers from which the algorithm has trained, 92% of those that look like the case at hand were ultimately proven to be cancerous.
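The confidence level described above can be read as a simple proportion: of the known cases that look most like the new finding, what share were proven cancerous? The hypothetical Python sketch below computes that proportion with a k-nearest-neighbors lookup. The feature vectors, the value of `k` and the tiny set of known cases are all invented for illustration; this is not a description of how ProFound AI or any other commercial product computes its scores.

```python
import math
from typing import List, Tuple

# (hypothetical feature vector describing a finding, proven malignant?)
Case = Tuple[List[float], bool]


def confidence_malignant(finding: List[float], known_cases: List[Case], k: int = 5) -> float:
    """Percentage of the k known cases most similar to `finding` that were proven cancerous."""
    # Smaller Euclidean distance = "looks more like the case at hand"
    nearest = sorted(known_cases, key=lambda case: math.dist(finding, case[0]))[:k]
    malignant = sum(1 for _, proven in nearest if proven)
    return 100.0 * malignant / len(nearest)


# Tiny invented reference set: a mostly malignant cluster and a benign cluster
known_cases: List[Case] = [
    ([0.90, 0.80], True),
    ([0.85, 0.90], True),
    ([0.80, 0.70], True),
    ([0.95, 0.85], True),
    ([0.75, 0.80], False),
    ([0.10, 0.20], False),
    ([0.20, 0.10], False),
    ([0.25, 0.15], False),
]

print(confidence_malignant([0.85, 0.80], known_cases))  # prints 80.0
```

Here, four of the five known cases most similar to the new finding were malignant, so the sketch reports 80%, the same kind of figure as the 92% in the example above.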

“The first step is identifying the finding, and then, using all of my expertise and my diagnostic criteria to determine if it’s a real finding,” explains Dr. Dean. “If it’s something that I think looks suspicious, then it warrants diagnostic imaging. We bring the patient back, do additional diagnostic views and try to see if that finding is reproducible — can we still see it? Where is it in the breast? And then, we have other tools such as targeted ultrasound where we would home in right on that area and see if there is a mass there, what the breast tissue looks like and then do a biopsy if needed.”

One benefit of AI programs is that they can function like a second set of eyes, or a second reader. They can improve the radiologist’s overall accuracy by decreasing callback rates and increasing specificity.

“We are seeing that the AI can guide the radiologist to work up a finding they might not have otherwise seen,” she says.

That’s especially important when you consider that earlier detection is crucial to helping identify cancers at the lowest possible stage, especially for aggressive molecular subtypes of breast cancer. Earlier detection may also help decrease the rate of interval cancers, or those that develop between mammogram screenings.

“I think it’s really beneficial to look at how AI is helping in the so-called near-miss cases. These are findings that are really hard to see for even a very experienced radiologist,” she continues. “In general, radiologists should be calling back less with the help of AI. And that’s the point: AI helps us tease out which cases are truly negative and which cases are truly suspicious and need to come back for further testing.”

Triage

Improving access to patient care can be critical, especially for emergencies. While we continue to work against bias in healthcare, AI is being used to triage medical cases by bumping those considered most critical to the top of the care chain.

“We do it on a disease-by-disease basis,” says Dr. Chen. “We identify diseases that need to be caught as early as possible and then we develop or bring in technology to do that. One instance we’re doing that is with stroke.”

Stroke

Time is brain tissue — so every minute counts when someone is having a stroke.

“It’s not all or nothing. It’s a process that happens over time,” explains Dr. Chen. “The problem is that that timeframe is measured in minutes. Every minute that a patient doesn’t receive care or doesn’t receive intervention, a little bit more of their brain becomes irreversibly damaged.”

And that’s especially true when you have what’s called a large vessel occlusion, a kind of ischemic stroke that occurs when a major artery in the brain is blocked. That kind of stroke is treatable if it’s caught quickly enough.

Now, out in the field, if EMS gets a call about a possible stroke, the crew can trigger a stroke alert. This alert sets off a cascade of management events that prepares a team for the patient’s arrival and treatment plan: available surgeons are alerted, beds are made available, rooms are prepped for surgery, and so on.

“We add AI to the front end of that process,” he further explains. “When patients who have a suspected stroke receive a scan, AI now reviews those images before any human has an opportunity to even open the scan on their computer.”

As soon as the brain scan is taken, the image is sent to a server where the program, Viz.ai, analyzes it fast and efficiently using its neural network to arrive at a preliminary diagnosis.

“The AI is cutting down precious minutes by being the first and fastest agent in this process to review those images,” says Dr. Chen. “If you can find a patient that’s having a stroke that can be treated, then it makes absolute sense to do everything you possibly can do to mobilize resources to treat it.”

If a large vessel occlusion is found, the program begins coordinating care. It’s integrated into scheduling software, so it knows who’s on call and which doctors need to be notified right away.

“The AI software kicks off a series of communications to make sure everyone in the chain — all the doctors, neurosurgeons, neurologists, radiologists and so on — are aware that this is happening and we’re able to expedite care,” he continues.
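The alert chain described above can be sketched as a small routing step: if the AI flags a suspected large vessel occlusion, look up who is on call for each role and message them. In this hypothetical Python sketch, the role list, the `on_call` lookup, the `send` callback and the doctor names are all assumptions for illustration; the article does not describe how Viz.ai actually connects to scheduling and messaging systems.

```python
from typing import Callable, Dict, List

# Hypothetical role list, based on the specialists named in the care chain above
STROKE_TEAM_ROLES = ["neurologist", "neurosurgeon", "radiologist"]


def route_stroke_alert(
    lvo_suspected: bool,
    on_call: Dict[str, str],
    send: Callable[[str, str], None],
) -> List[str]:
    """Notify whoever is on call for each stroke-team role when the AI flags a suspected LVO."""
    if not lvo_suspected:
        return []  # no alert; the scan is still read by a radiologist as usual
    notified: List[str] = []
    for role in STROKE_TEAM_ROLES:
        person = on_call.get(role)  # stand-in for a lookup in scheduling software
        if person is None:
            continue  # a real system would escalate a coverage gap instead of skipping it
        send(person, f"Suspected large vessel occlusion: {role} needed now")
        notified.append(person)
    return notified


# Hypothetical on-call roster and a send function that just records messages
sent: List[tuple] = []
on_call = {"neurologist": "Dr. Lee", "neurosurgeon": "Dr. Patel", "radiologist": "Dr. Gomez"}
notified = route_stroke_alert(True, on_call, lambda who, msg: sent.append((who, msg)))
print(notified)  # prints ['Dr. Lee', 'Dr. Patel', 'Dr. Gomez']
```

Passing `send` in as a parameter keeps the routing decision separate from whatever paging or messaging system a hospital actually uses.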

Complex measurements

A patient’s journey often doesn’t begin and end with diagnosis and treatment. Often, the journey involves watching, waiting and revisiting a diagnosis. For example, in the case of lung cancer, it’s common for oncologists to begin tracking the growth of nodules before they’re proven to be cancerous.

“That’s the whole point of doing screening programs,” says Dr. Chen. “The ones that grow are more likely to be cancer. The ones that don’t grow are more likely to be benign. That’s why they’re important to track over time. And most of that work is done manually by trained radiologists who go through every nodule that they can see in the lung. They track it, measure it and report on it.”

That kind of work can be tedious and time-consuming. That’s why it’s a focus area for using AI.

“We are actively looking at and trying to deploy a solution that can do the detection and measurement of these nodules in the lung automatically,” he adds. “That would help with the consistency and reproducibility of those measurements now with different kinds of cancer.”
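Once nodules have been detected and measured, the tracking step Dr. Chen describes comes down to comparing each nodule’s size across scans. The Python sketch below flags nodules whose diameter grew between the first and latest scan. The nodule IDs, the measurements and the 1.5 mm cutoff are hypothetical values chosen for illustration, not a clinical guideline, and the sketch leaves out the harder detection and measurement steps the AI would perform.

```python
from datetime import date
from typing import Dict, List, Tuple

Measurement = Tuple[date, float]  # (scan date, nodule diameter in mm)

GROWTH_THRESHOLD_MM = 1.5  # hypothetical cutoff for illustration only


def growing_nodules(history: Dict[str, List[Measurement]]) -> List[str]:
    """Return IDs of nodules whose latest diameter exceeds the first by more than the threshold."""
    flagged = []
    for nodule_id, measurements in history.items():
        ordered = sorted(measurements)  # oldest scan first
        if len(ordered) < 2:
            continue  # need at least two scans to see change over time
        first_mm = ordered[0][1]
        latest_mm = ordered[-1][1]
        if latest_mm - first_mm > GROWTH_THRESHOLD_MM:
            flagged.append(nodule_id)
    return flagged


# Hypothetical measurements from two screening scans a year apart
history = {
    "right-upper-1": [(date(2023, 1, 10), 5.0), (date(2024, 1, 12), 7.2)],
    "left-lower-1": [(date(2023, 1, 10), 4.0), (date(2024, 1, 12), 4.3)],
}
print(growing_nodules(history))  # prints ['right-upper-1']
```

Automating this comparison matters mainly for consistency: the same rule is applied to every nodule on every scan.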

Managing tasks and patient services

Like the scheduling software, AI is being used in small and large ways behind the scenes to free up physicians’ time and to help increase patients’ access to care. In his 2024 State of the Clinic address, Cleveland Clinic’s CEO and President Tom Mihaljevic, MD, highlighted several practical areas where AI is already being used both in and out of the exam room. Among them:

- An AI-powered chatbot can provide answers to common patient questions. It can also help with scheduling and pull up a patient’s medical history, past appointments, medication lists, previous doctors they’ve visited and so on.

- To cut down on the time providers spend taking notes during an appointment, a continuously learning AI program can use ambient listening to tune in to conversations between patients and their healthcare providers. The program can capture important notes, create visit summaries, assist with paperwork and generate instructions for prescription medications that the provider orders.

Broadly speaking, AI can also be beneficial for virtual appointments. Studies show that AI monitoring tools can help check whether patients are using medications such as inhalers or insulin pens as prescribed, and can provide much-needed guidance when questions arise.

The future of AI in healthcare

The future of AI in healthcare, notes Dr. Jehi, is perhaps brightest in the realm of research.

“I’ve learned throughout this process that there is a lot more to be learned by using AI,” she says.

As an epilepsy specialist, Dr. Jehi researches how machine learning has changed epilepsy surgery as we know it.

Traditionally, if a patient with epilepsy continues to have seizures and isn’t responding to medication treatment, surgery becomes the next best option. As part of the surgical procedure, a surgeon would find the spot in the brain that’s triggering the seizures, make sure that spot isn’t critical for their functioning and then safely remove it.

“The way we used to make those decisions, we’d do a bunch of tests, we’d measure brainwaves, we’d take a picture of the brain, we’d look at how the radiologist or the EEG doctor interpreted the results, and then, we’d take the test results,” she shares. “Based on our own human experience, we’d decide if we want to do the surgery or not. But we were very limited in our ability to build collective knowledge.”

In essence, Dr. Jehi explains, doctors were working in a vacuum. They knew the expertise they’d gained over the years was valuable on an individual level, but without looking at the bigger picture, it was hard to tell which new patients would respond best to which surgical technique.

Now, machine learning is helping fill that gap in collective knowledge by pulling all this patient data together and distilling it in one location. Doctors can access that information in one place, use it to research the disease and the effectiveness of different treatment options, and apply what they learn to their practice.

“From the patient perspective, nothing really much changes. They’re still getting the tests that they need for the clinical decision to be made,” she enthuses. “That is the beauty of what AI offers. It’s a task for us to get exponentially more insight from the same type of clinical data that we always had but we just didn’t know what to do with. AI is allowing us to deep dive into those tests and get more insights than just what our superficial initial interpretation was.”

Currently, Dr. Jehi is working to improve specialized AI predictive models that can accurately guide medical and surgical epilepsy decision-making.

“We are doing research to come up with a way to reduce these complex AI models to simpler tools that could be more easily integrated in clinical care,” she notes.

Dr. Jehi and other researchers have also used machine learning to identify biomarkers that help predict which patients have a higher risk of their epilepsy recurring after surgery. Work is also underway to fully automate the detection and localization of the brain regions that need to be removed during epilepsy surgery.

Right now, Dr. Jehi is focusing on understanding how a patient’s genetic makeup and brain play into their epilepsy. How does epilepsy affect each patient, depending on a number of factors? How do patients respond to epilepsy surgery? And are these factors related to how well their surgery works down the road?

“We’ve been completely overlooking how nature works,” she says. “Until now, we haven’t really analyzed how the genetic makeup of individuals factors into all of this. With my research, we have a lot of evidence that makes us believe that genetic makeup is actually quite important in driving surgical outcomes.”

With AI and machine learning, Dr. Jehi hopes to continue pushing this research to the next level by looking at increasingly larger groups of patients.

Our AI journey

As we continue to improve our understanding of AI and pursue innovation and discovery, it’s up to healthcare providers around the world to ask how best to use the tools at their disposal. The World Health Organization (WHO) has already issued additional guidelines for safe and ethical AI use in healthcare, building on its original 2021 guidelines but adding caution around large language models like ChatGPT and Bard.

But when AI is used to further research and improve patient care with ethics and safety as the foundation of those efforts, its potential for the future of healthcare knows no bounds.

“I see AI as a path forward that helps us make sure that no data is left behind,” encourages Dr. Jehi. “When we’re doing research and we’re developing a new predictive model, or we want to better understand how a disease progresses, or we want to develop a new drug, or we want to just generate new knowledge — that’s what research is. It’s the generation of new knowledge. The more data that we can put in, the more our chances are of finding something new and of those things actually being meaningful.”

Article link: https://health.clevelandclinic.org/ai-in-healthcare