AI technologies have had an undeniable impact on the information landscape.

If deployed responsibly, AI can facilitate access to accurate, reliable information, foster free expression, and contribute to healthy and vibrant information ecosystems.

But how do we make this vision a reality?

The UN Security Council took up these questions in a recent informal meeting on “Harnessing safe, inclusive and trustworthy AI for the maintenance of international peace and security.”

One of the focus areas of the meeting was AI’s impact on information integrity.

Two key risks were highlighted:

1. The role of AI in curating and creating information, and people’s growing reliance on this technology to shape their understanding of the world.

At present, tech companies continue to integrate AI into our everyday applications at breakneck speed, but genAI tools cannot uniformly be relied on as sources of accurate information.

Ongoing studies and testing show that they frequently do not distinguish between rigorous science on the one hand and dirty data or outright nonsense on the other.

And yet, people are increasingly accessing this convenient and flawed data without being equipped to assess its veracity or reliability. This can contribute to a deeply concerning trend: the lack of trust in any information source and in the information ecosystem more broadly.

People just don’t know what is real and what to believe.

At pivotal societal moments and especially in fragile settings, this can feed into instability and unrest, sometimes with disastrous consequences.

We are, in effect, guinea pigs in an information experiment in which the resilience of our societies is being put to the test.

2. The misuse of AI in facilitating the creation and dissemination of false and hateful information at scale.

This can affect the peace and security of communities and countries.

GenAI tools have made it immeasurably easier for a broad range of actors to spread false claims for financial or strategic gain.

It now costs next to nothing to quickly and easily create a flood of convincing lies and hate, with minimal human intervention.

To target specific groups and individuals, actors draw on our personal data that has been sold as part of the advertising supply chain.

Tactics like this can trigger diplomatic crises, incite unrest, and undermine understanding of realities on the ground.

AI-generated deepfake images, audio and video have been weaponized in conflicts from Ukraine to Gaza to Sudan.

We’re also now seeing AI used openly and explicitly to generate content designed to undermine social cohesion.

Content that demonises or dehumanises women, refugees, migrants, and minorities.

Content that is anti-Semitic, Islamophobic, racist and xenophobic.

The UN has experienced grave impacts on our missions and priorities.

Peace and humanitarian operations are targeted with false and hateful narratives, negatively affecting the safety and security of UN personnel and making it harder for them to implement their mandates.

In turn, this is impacting communities small and large, jeopardizing their stability.

What are some of the concrete steps needed to address these areas of risk?

The UN system has been ramping up efforts.

This includes the 3R approach: research, risk assessment and response.

Through research we can better understand information risks in complex, multiplatform, multilingual environments.

Our work includes outreach with affected communities to hear and understand their perceptions and concerns.

UN entities then assess the risk posed to UN mandate delivery and thematic priorities.

We use this insight to design appropriate mitigation and response measures.

Most immediately, this means quick and proactive communication to address information voids.

This can be done through our own channels and also via coalitions with community leaders, influencers and civil society groups.

Of course, these efforts require resources, including for skills training and technology.

We also continue to support the efforts of the international community, including through our work to implement the UN Global Principles for Information Integrity.

The Principles provide a framework for action to strengthen the information ecosystem — and are a resource to UN member countries in meeting the commitments agreed in the Global Digital Compact.

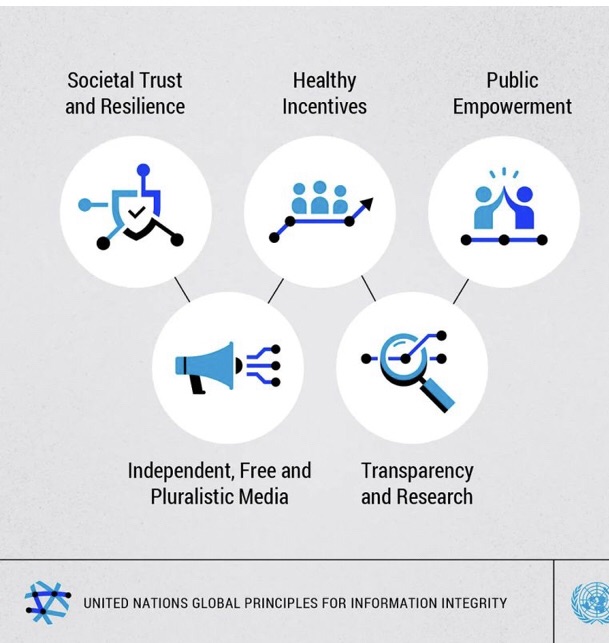

The Principles contain recommendations for a wide range of stakeholders around five pillars: societal trust and resilience; healthy incentives; public empowerment; independent, free and pluralistic media; and transparency and research.

The United Nations Global Principles for Information Integrity

Three key recommendation areas are highlighted below.

1. Countries play a central role in shaping information spaces, beginning with obligations to respect, protect and promote human rights, in particular the right to freedom of expression.

This means ensuring that regulatory measures to address information integrity comply with applicable international law and are carried out with the full participation of civil society.

Freedom of expression requires not only that people are able to express their views, but that they are able to seek and receive ideas and information of all kinds.

It is exponentially harder to do this when you’re in a polarized, opaque information environment crowded with lies and hate.

In such a landscape, guardrails allow for more free speech, not less, and protect people who feel unsafe in online spaces, giving voice to those otherwise silenced.

Guardrails can help enable inclusive access to information.

And they can support and encourage innovation and help foster public trust in fast-emerging AI technologies.

There is growing awareness among the communities the UN serves that the sooner guardrails are established, the lower the risk for us all.

2. Information integrity is not possible without a free, independent and pluralistic media.

Professional journalism requires considerable investment. Yet the advertising-driven business model which long supported independent media has drastically eroded.

AI has prompted new concerns. Quality journalism is being scraped and summarized or used to train AI without permission or compensation.

GenAI search summaries replacing standard search results can reduce web traffic to news sites, further affecting revenue streams.

Many news outlets, particularly at the local level, just can’t compete. For them it is not just a matter of relevance. It is a matter of survival.

The result is news deserts. The vacuum is filled by AI-generated articles or downgraded versions of journalistic content, or malicious content imitating news.

This can be particularly destabilising in conflict and crisis zones.

Responses must therefore urgently support sustainable business models for public interest media.

They must also ensure better protection for media workers, along with researchers, academics and civil society, who are under attack around the world.

3. There is an urgent global need for public empowerment in the information ecosystem, including through measures to boost resilience.

Media and digital literacy skills can allow people to navigate information spaces safely and effectively.

Countries can prioritize the literacy needs of groups in vulnerable and marginalized situations — who are often those most adversely affected by information risks.

They can also undertake literacy efforts around specific problems related to AI, keeping up with new and emerging technologies.

Public awareness must improve around online rights, how AI works, and how personal data is used.

The urgency of the challenge requires multi-stakeholder coalitions, with collaboration between countries to support capacity building and increase global resilience.

This is the only viable path to an information ecosystem in which AI innovation is harnessed for information integrity, human rights and international peace and security.

The text above is adapted from a briefing to the Security Council by Charlotte Scaddan, Senior Adviser on Information Integrity, UN Global Communications

Article link: https://medium.com/we-the-peoples/information-integrity-in-the-ai-age-51494645e765